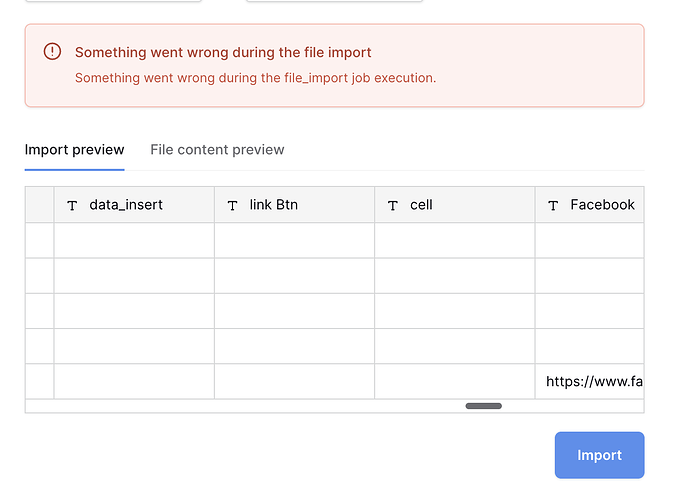

I didn’t have this issue before.

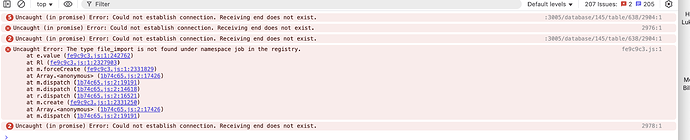

Backend logs:

a78-e35e-4f2c-bd95-a9f43018d640] received

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] [2025-03-18 01:43:16,721: ERROR/ForkPoolWorker-1] Task baserow.core.jobs.tasks.run_async_job[05342a78-e35e-4f2c-bd95-a9f43018d640] raised unexpected: IndexError('list index out of range')

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] Traceback (most recent call last):

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py", line 453, in trace_task

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] R = retval = fun(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py", line 736, in protected_call

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] return self.run(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/jobs/tasks.py", line 38, in run_async_job

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] JobHandler.run(job)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/jobs/handler.py", line 76, in run

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] out = job_type.run(job, progress)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/file_import/job_types.py", line 177, in run

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ).do(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/rows/actions.py", line 272, in do

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] created_rows, error_report = RowHandler().import_rows(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 73, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] raise ex

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 69, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] result = wrapped_func(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/rows/handler.py", line 1602, in import_rows

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] created_rows, creation_report = self.create_rows_by_batch(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 73, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] raise ex

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 69, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] result = wrapped_func(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/rows/handler.py", line 1473, in create_rows_by_batch

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] created_rows, creation_report = self.create_rows(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 73, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] raise ex

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 69, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] result = wrapped_func(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/rows/handler.py", line 1270, in create_rows

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] return self.force_create_rows(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 73, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] raise ex

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/core/telemetry/utils.py", line 69, in _wrapper

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] result = wrapped_func(*args, **kwargs)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/rows/handler.py", line 1206, in force_create_rows

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] rows_created.send(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/venv/lib/python3.11/site-packages/django/dispatch/dispatcher.py", line 189, in send

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] response = receiver(signal=self, sender=sender, **named)

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/fields/notification_types.py", line 375, in notify_users_when_rows_created

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] UserMentionInRichTextFieldNotificationType.create_notifications_grouped_by_user(

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/fields/notification_types.py", line 349, in create_notifications_grouped_by_user

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] for field, row, user_ids_to_notify in iterator:

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] File "/baserow/backend/src/baserow/contrib/database/fields/notification_types.py", line 229, in _iter_field_row_and_users_to_notify

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] model = rows[0]._meta.model

2025-03-17 21:43:16 [EXPORT_WORKER][2025-03-18 01:43:16] ~~~~^^^

2025-03-17 21:43:16 [BACKEND][2025-03-18 01:43:16] 192.168.65.1:0 - "POST /api/database/tables/656/import/async/ HTTP/1.1" 200

2025-03-17 21:43:20 [BEAT_WORKER][2025-03-18 01:43:20] [2025-03-18 01:43:00,015: INFO/MainProcess] Scheduler: Sending due task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email() (baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email)

2025-03-17 21:43:20 [BEAT_WORKER][2025-03-18 01:43:20] [2025-03-18 01:43:20,390: INFO/MainProcess] Scheduler: Sending due task baserow.contrib.database.fields.tasks.delete_mentions_marked_for_deletion() (baserow.contrib.database.fields.tasks.delete_mentions_marked_for_deletion)

2025-03-17 21:43:20 [CELERY_WORKER][2025-03-18 01:43:20] [2025-03-18 01:43:16,194: INFO/ForkPoolWorker-1] Task baserow.ws.tasks.broadcast_to_users[867de060-3ee5-4bc4-b611-dd72dcb83f94] succeeded in 0.01727237500017509s: None

2025-03-17 21:43:20 [EXPORT_WORKER][2025-03-18 01:43:20] IndexError: list index out of range

2025-03-17 21:43:20 [CELERY_WORKER][2025-03-18 01:43:20] [2025-03-18 01:43:20,405: INFO/MainProcess] Task baserow.contrib.database.fields.tasks.delete_mentions_marked_for_deletion[8de837e8-345d-4f97-907f-49366e0f6400] received

2025-03-17 21:43:20 [EXPORT_WORKER][2025-03-18 01:43:20] [2025-03-18 01:43:20,410: INFO/MainProcess] Task baserow_enterprise.tasks.count_all_user_source_users[1525bcc0-488c-45b6-b451-3a208389e4f2] received
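Reading the traceback, the import gets as far as sending the `rows_created` signal in `force_create_rows`. The crash happens in the rich-text mention notification receiver, at `model = rows[0]._meta.model`, and the message `list index out of range` means the `rows` list reaching that line was empty. Below is a minimal, self-contained sketch of that failure mode and of an early-return guard. The functions `first_model_name` / `first_model_name_guarded` and the `Row` class are hypothetical stand-ins, not Baserow's actual code, and this is not a confirmed fix.

```python
from typing import List, Optional


def first_model_name(rows: List[object]) -> str:
    # Hypothetical stand-in for the pattern `model = rows[0]._meta.model`
    # from the traceback: it assumes at least one row exists.
    return type(rows[0]).__name__


def first_model_name_guarded(rows: List[object]) -> Optional[str]:
    # Hypothetical guard: return early when there are no rows,
    # instead of indexing into an empty list.
    if not rows:
        return None
    return type(rows[0]).__name__


class Row:
    # Hypothetical placeholder for a row model instance.
    pass


try:
    first_model_name([])
except IndexError as exc:
    print(f"IndexError: {exc}")  # prints "IndexError: list index out of range"

print(first_model_name_guarded([]))       # prints "None"
print(first_model_name_guarded([Row()]))  # prints "Row"
```

The guarded version simply skips the work when there is nothing to notify about. Why an empty `rows` list reaches the notification receiver during this import is something the logs alone don't show.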