And I believe it’s because I converted a text column to a single select column.

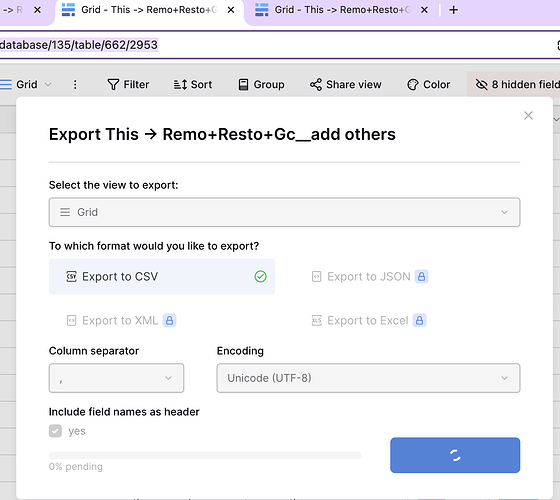

Hi @jpca999 - if the export gets stuck, please wait 30 minutes and try again. Let us know if you manage to export the data on your second attempt.

It ran for the whole night and it’s still stuck.

It was working fine in the previous release.

If a user can’t export their work, it makes using Baserow very frustrating and unreliable.

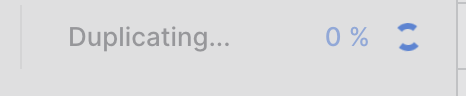

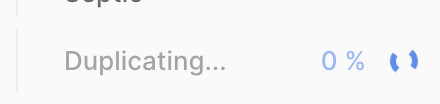

It’s still stuck.

Is there a way to manually stop the database duplication? Haven’t they set a time limit for this command to run, like they did for the export command?

I think the export isn’t working because it’s stuck duplicating the table.

**But where is the message that tells the user what’s happening, why things are stuck, and how long to wait?**

It’s been over four hours and the database table is still duplicating! How can I stop it?

Hey @jpca999,

Are you self hosting or using the managed service?

Self-hosted on localhost.

Hey @jpca999, does it stay stuck at 0%? If so, it’s probably because your export worker is not picking up any new tasks. This can be caused by having too many export tasks in the queue if you keep retrying the export.

Can you please share exactly how you’re currently self-hosting, and also share your output logs?
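To see whether tasks really are piling up, you can ask the broker how many messages are waiting. A minimal sketch, assuming Celery’s Redis transport (which keeps each queue’s pending messages in a Redis list named after the queue) and assuming the queue names `celery` and `export`; check the `-Q` option of each worker for the actual names. It only relies on redis-py’s `llen` and `Redis.from_url`.

```python
from typing import Dict, Iterable, Protocol


class SupportsLlen(Protocol):
    """Anything with a redis-py style llen(name) method."""

    def llen(self, name: str) -> int: ...


# Assumed queue names; confirm them against the -Q option of each worker.
DEFAULT_QUEUES = ("celery", "export")


def queue_backlog(client: SupportsLlen, queues: Iterable[str] = DEFAULT_QUEUES) -> Dict[str, int]:
    """Return the number of messages waiting in each queue.

    With Celery's Redis transport, pending messages for a queue sit in a
    Redis list named after that queue, so LLEN approximates the backlog.
    A missing key simply counts as 0.
    """
    return {name: int(client.llen(name)) for name in queues}


def connect(url: str = "redis://localhost:6379/0"):
    """Open a redis-py client (imported lazily so this module loads without it)."""
    import redis  # pip install redis

    return redis.Redis.from_url(url)
```

For example, `print(queue_backlog(connect("redis://localhost:6379/0")))` run somewhere that can reach Redis. A steadily growing `export` count while nothing completes would point at the worker rather than at the export itself.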

See, this has been stuck here for a few days now.

I downloaded the repository and ran it. It showed up in Docker, and now, every time I need to run it, I just launch it from Docker on localhost:3000.

How do I unclog all these tasks?

I would need to see the output logs before I know what’s going on specifically.

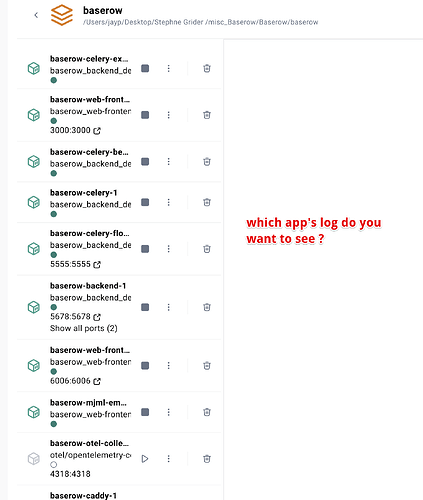

Which logs do you want to see? There are about 14 apps running in my Docker.

The output logs of the Baserow you’re running.

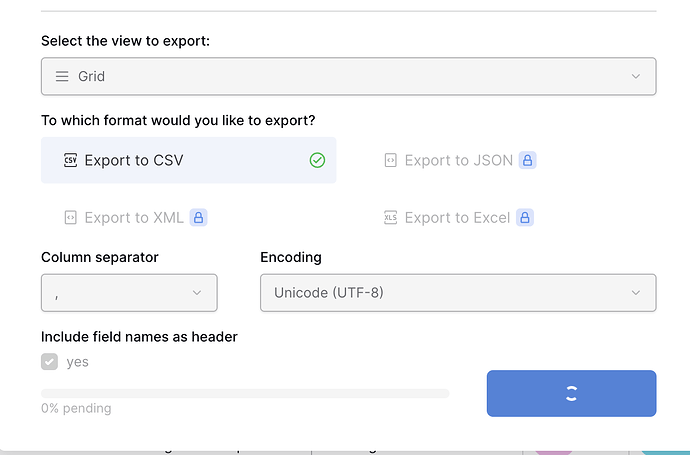

Based on the logs, it looks like no connection can be made to the Redis server. I don’t have enough information to see why it’s not starting. That would explain why the export and duplication are not working, because Redis is used as the broker for the async tasks.
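One way to confirm a broker problem is to check whether the broker host name resolves and its port accepts TCP connections. A minimal standard-library sketch; the example URL `redis://:@redis:6379/0` is the one the worker reports in the logs later in this thread. In a Docker Compose setup, a service name such as `redis` typically resolves only from containers on the same Docker network, so run the check from there (for example inside a backend container) rather than on the host.

```python
import socket
from urllib.parse import urlparse


def parse_redis_url(url: str) -> tuple:
    """Split a redis:// URL into (host, port, db), applying Redis defaults."""
    parsed = urlparse(url)
    host = parsed.hostname or "localhost"
    port = parsed.port or 6379
    db = int(parsed.path.lstrip("/") or 0)
    return host, port, db


def can_reach(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if host resolves and accepts a TCP connection on port.

    socket.gaierror ("Name or service not known"), refused connections and
    timeouts are all subclasses of OSError, so each of them yields False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_broker(url: str) -> str:
    """Human-readable reachability summary for a Redis broker URL."""
    host, port, db = parse_redis_url(url)
    status = "reachable" if can_reach(host, port) else "NOT reachable"
    return f"{host}:{port} (db {db}) is {status}"
```

For example, `print(check_broker("redis://:@redis:6379/0"))`. "NOT reachable" from inside the worker’s network would match the `Name or service not known` error in the logs.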

To me it sounds like blah blah blah. How do I get it to work?

To me, it sounds like you’re not giving me what I’m asking for.

I can’t help you if I don’t know what’s going on. Please restart your Baserow container, and share all the output logs it’s giving you. That’s what I need to tell you how to get it to work. Otherwise, I’m blind, and can’t see what’s happening under the hood.

It would also help if you can share as many details as possible on how you’re currently self-hosting. Deployment, environment variables, etc.

I’ve restarted it several times, but it’s still stuck. There are many apps running—could you let me know which app’s log you need specifically? Or is there a way I can share all the logs compiled in one place?
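If the stack was started with Docker Compose, running `docker compose logs --no-color > baserow-logs.txt` from the project directory collects every service’s output in one file. For containers started some other way, here is a minimal Python sketch that shells out to the Docker CLI (`docker ps --format` and `docker logs --tail`) and concatenates the logs of every running container whose name contains a keyword. The keyword `baserow`, the output file name and the tail length are assumptions; pass `keyword=""` to include every container (Redis and PostgreSQL too).

```python
import subprocess
from typing import Iterable, List


def matching_containers(names: Iterable[str], keyword: str = "baserow") -> List[str]:
    """Keep container names that contain keyword (case-insensitive), sorted."""
    return sorted(n for n in names if keyword.lower() in n.lower())


def running_container_names() -> List[str]:
    """List running containers via `docker ps --format {{.Names}}`."""
    out = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True, encoding="utf-8", errors="replace", check=True,
    ).stdout
    return [line.strip() for line in out.splitlines() if line.strip()]


def collect_logs(outfile: str = "baserow-logs.txt", keyword: str = "baserow",
                 tail: str = "500") -> List[str]:
    """Write the last `tail` log lines of each matching container to one file."""
    names = matching_containers(running_container_names(), keyword)
    with open(outfile, "w", encoding="utf-8") as fh:
        for name in names:
            fh.write(f"===== {name} =====\n")
            # docker logs replays the container's stdout and stderr; merge both.
            result = subprocess.run(
                ["docker", "logs", "--tail", tail, name],
                stdout=subprocess.PIPE, stderr=subprocess.STDOUT,
                encoding="utf-8", errors="replace",
            )
            fh.write(result.stdout + "\n")
    return names
```

Calling `collect_logs()` returns the container names it included, and the resulting file can be attached to a reply.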

Why are there FIVE backend apps running in this?

Did you deploy this using our Helm chart? That runs in multi-service mode by default so that it can be scaled horizontally. This is typical for a Kubernetes deployment.

Baserow also depends on a PostgreSQL and Redis database. How are you running those services?

It would be great to see the logs of the baserow-celery-export-worker as that one is responsible for the background jobs.

Yes, I’m running all services, and I’m running it locally.

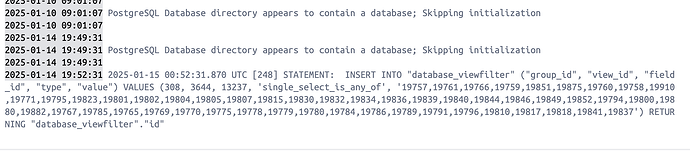

Here are the logs you requested:

25-01-09 12:28:00 [2025-01-09 17:28:00,220: INFO/MainProcess] Task baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion[b311aa33-fe2b-4a5b-ab79-382440f255dc] received

2025-01-09 12:28:00 [2025-01-09 17:28:00,222: INFO/MainProcess] Task baserow.core.trash.tasks.permanently_delete_marked_trash[9e1815fb-a408-4a71-9efb-d4437635c9ce] received

2025-01-09 12:28:00 [2025-01-09 17:28:00,246: WARNING/ForkPoolWorker-6] 67|2025-01-09 17:28:00.246|INFO|baserow.core.action.handler:clean_up_old_undoable_actions:306 - Cleaned up 0 actions.

2025-01-09 12:28:00 [2025-01-09 17:28:00,246: INFO/ForkPoolWorker-6] Task baserow.core.action.tasks.cleanup_old_actions[7fecc0fe-5ad2-45a1-aae0-e4830b676dcc] succeeded in 0.03490462499939895s: None

2025-01-09 12:28:00 [2025-01-09 17:28:00,271: INFO/ForkPoolWorker-7] Task baserow.core.import_export.tasks.mark_import_export_resources_for_deletion[a28571d3-b116-4cce-88d4-4eb26ba31d41] succeeded in 0.04496316699987801s: None

2025-01-09 12:28:00 [2025-01-09 17:28:00,277: INFO/ForkPoolWorker-3] Task baserow.core.jobs.tasks.clean_up_jobs[c2c85e8b-2c36-4933-8d65-81d2e5619e25] succeeded in 0.04531170800055406s: None

2025-01-09 12:28:00 [2025-01-09 17:28:00,282: WARNING/ForkPoolWorker-1] 62|2025-01-09 17:28:00.281|INFO|baserow.core.trash.handler:permanently_delete_marked_trash:305 - Successfully deleted 0 trash entries and their associated trashed items.

2025-01-09 12:28:00 [2025-01-09 17:28:00,282: WARNING/ForkPoolWorker-4] 65|2025-01-09 17:28:00.281|INFO|baserow.core.trash.handler:mark_old_trash_for_permanent_deletion:223 - Successfully marked 0 old trash items for deletion as they were older than 72 hours.

2025-01-09 12:28:00 [2025-01-09 17:28:00,284: INFO/ForkPoolWorker-1] Task baserow.core.trash.tasks.permanently_delete_marked_trash[9e1815fb-a408-4a71-9efb-d4437635c9ce] succeeded in 0.03949712499979796s: None

2025-01-09 12:28:00 [2025-01-09 17:28:00,284: INFO/ForkPoolWorker-4] Task baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion[b311aa33-fe2b-4a5b-ab79-382440f255dc] succeeded in 0.041708458000357496s: None

2025-01-09 12:28:00 [2025-01-09 17:28:00,285: INFO/ForkPoolWorker-2] Task baserow.core.import_export.tasks.delete_marked_import_export_resources[0590eb7a-1dd8-41b2-b4cb-8249da847ea4] succeeded in 0.05999954099934257s: None

2025-01-09 12:29:00 [2025-01-09 17:29:00,014: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[7b520ced-606e-426e-b7ca-de6d88902e16] received

2025-01-09 12:29:00 [2025-01-09 17:29:00,031: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[f2631961-2d5f-43d0-bc4f-67ea30d98752] received

2025-01-09 12:29:00 [2025-01-09 17:29:00,031: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[7b520ced-606e-426e-b7ca-de6d88902e16] succeeded in 0.011460042000180692s: None

2025-01-09 12:29:00 [2025-01-09 17:29:00,064: INFO/ForkPoolWorker-2] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[f2631961-2d5f-43d0-bc4f-67ea30d98752] succeeded in 0.024817915999847173s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,035: INFO/MainProcess] Task baserow.contrib.database.fields.tasks.run_periodic_fields_updates[9d28119f-d1be-412c-87bb-0908ec04aaed] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,041: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[2473d7b7-05a2-4947-a6d4-d8d4a8049c63] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,075: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[8a3c6c71-88ea-4266-b94f-35e92a004205] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,075: INFO/ForkPoolWorker-2] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[2473d7b7-05a2-4947-a6d4-d8d4a8049c63] succeeded in 0.02002524999988964s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,114: INFO/ForkPoolWorker-7] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[8a3c6c71-88ea-4266-b94f-35e92a004205] succeeded in 0.03052624999963882s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,253: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[77623d89-fe9e-4d2c-957b-adecdf6e6f3c] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,254: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[ee0bb0b7-702e-4f93-8c1b-4a0b6d98dc4d] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,255: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[d4fab801-b85a-4dd0-b295-a6edb020360f] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,257: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[58c5a92b-7df3-4d5b-a0f4-e7aca077fb20] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,258: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[efdc40e1-11e7-4175-b968-423888049901] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,280: INFO/ForkPoolWorker-6] Task baserow.contrib.database.fields.tasks.run_periodic_fields_updates[9d28119f-d1be-412c-87bb-0908ec04aaed] succeeded in 0.23426370800007135s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,282: INFO/MainProcess] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[95d0f5fb-412c-4bf9-bf8b-ac6be8ffc926] received

2025-01-09 12:30:00 [2025-01-09 17:30:00,337: WARNING/ForkPoolWorker-6] 67|2025-01-09 17:30:00.336|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 108’s tsvs with optional field filter of [1080, 1079].

2025-01-09 12:30:00 [2025-01-09 17:30:00,337: INFO/ForkPoolWorker-6] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[95d0f5fb-412c-4bf9-bf8b-ac6be8ffc926] succeeded in 0.05137166700023954s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,366: WARNING/ForkPoolWorker-2] 63|2025-01-09 17:30:00.363|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 110’s tsvs with optional field filter of [1085].

2025-01-09 12:30:00 [2025-01-09 17:30:00,367: INFO/ForkPoolWorker-2] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[77623d89-fe9e-4d2c-957b-adecdf6e6f3c] succeeded in 0.11255808300029457s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,384: WARNING/ForkPoolWorker-7] 68|2025-01-09 17:30:00.383|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 111’s tsvs with optional field filter of [1086].

2025-01-09 12:30:00 [2025-01-09 17:30:00,384: INFO/ForkPoolWorker-7] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[ee0bb0b7-702e-4f93-8c1b-4a0b6d98dc4d] succeeded in 0.12974445800045942s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,408: WARNING/ForkPoolWorker-1] 62|2025-01-09 17:30:00.406|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 109’s tsvs with optional field filter of [1089].

2025-01-09 12:30:00 [2025-01-09 17:30:00,409: INFO/ForkPoolWorker-1] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[efdc40e1-11e7-4175-b968-423888049901] succeeded in 0.14149704200008273s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,421: WARNING/ForkPoolWorker-4] 65|2025-01-09 17:30:00.420|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 109’s tsvs with optional field filter of [1088].

2025-01-09 12:30:00 [2025-01-09 17:30:00,422: INFO/ForkPoolWorker-4] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[58c5a92b-7df3-4d5b-a0f4-e7aca077fb20] succeeded in 0.15245279200007644s: None

2025-01-09 12:30:00 [2025-01-09 17:30:00,460: WARNING/ForkPoolWorker-3] 64|2025-01-09 17:30:00.458|INFO|baserow.contrib.database.search.handler:update_tsvector_columns:495 - Updated a unknown number of rows in table 112’s tsvs with optional field filter of [1087].

2025-01-09 12:30:00 [2025-01-09 17:30:00,462: INFO/ForkPoolWorker-3] Task baserow.contrib.database.search.tasks.async_update_tsvector_columns[d4fab801-b85a-4dd0-b295-a6edb020360f] succeeded in 0.1931536669999332s: None

2025-01-09 12:31:00 [2025-01-09 17:31:00,014: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[271b766e-ebcf-43bb-b02b-f07887100025] received

2025-01-09 12:31:00 [2025-01-09 17:31:00,033: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[929452eb-a195-4f67-b331-eefbf94f9aed] received

2025-01-09 12:31:00 [2025-01-09 17:31:00,033: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[271b766e-ebcf-43bb-b02b-f07887100025] succeeded in 0.010991624999405758s: None

2025-01-09 12:31:00 [2025-01-09 17:31:00,074: INFO/ForkPoolWorker-2] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[929452eb-a195-4f67-b331-eefbf94f9aed] succeeded in 0.03170312499969441s: None

2025-01-09 12:32:00 [2025-01-09 17:32:00,035: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[fb246871-3d3d-42e7-994c-cfc6e66c4e25] received

2025-01-09 12:32:00 [2025-01-09 17:32:00,055: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[37b68805-36ab-4a05-aab4-9532062d1a01] received

2025-01-09 12:32:00 [2025-01-09 17:32:00,055: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[fb246871-3d3d-42e7-994c-cfc6e66c4e25] succeeded in 0.012075000000550062s: None

2025-01-09 12:32:00 [2025-01-09 17:32:00,087: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[37b68805-36ab-4a05-aab4-9532062d1a01] succeeded in 0.03015858299932006s: None

2025-01-09 12:32:40 [2025-01-09 17:32:40,224: INFO/MainProcess] Task baserow.contrib.database.export.tasks.clean_up_old_jobs[92543c72-d9eb-46a8-b0f9-899a0e1680a5] received

2025-01-09 12:32:40 [2025-01-09 17:32:40,242: WARNING/ForkPoolWorker-6] 67|2025-01-09 17:32:40.241|INFO|baserow.contrib.database.export.handler:clean_up_old_jobs:171 - Cleaning up 0 old jobs

2025-01-09 12:32:40 [2025-01-09 17:32:40,243: INFO/ForkPoolWorker-6] Task baserow.contrib.database.export.tasks.clean_up_old_jobs[92543c72-d9eb-46a8-b0f9-899a0e1680a5] succeeded in 0.0162880830002905s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,008: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[b0b29c72-27ba-4e24-9814-9442c12418d3] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,012: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[b0b29c72-27ba-4e24-9814-9442c12418d3] succeeded in 0.0025270409996664966s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,012: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[e49b69ce-3f0e-473e-9755-58cf1ce27fb6] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,024: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[e49b69ce-3f0e-473e-9755-58cf1ce27fb6] succeeded in 0.010187374999986787s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,216: INFO/MainProcess] Task baserow.core.action.tasks.cleanup_old_actions[71260989-1f61-4155-8f97-03dd5fad6808] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,220: INFO/MainProcess] Task baserow.core.import_export.tasks.delete_marked_import_export_resources[5b563680-8840-4e61-936c-fa36d50de47c] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,223: INFO/MainProcess] Task baserow.core.import_export.tasks.mark_import_export_resources_for_deletion[9ba30115-3731-479e-a764-1b0394bcdcdb] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,226: INFO/MainProcess] Task baserow.core.jobs.tasks.clean_up_jobs[7d1c5790-d576-479e-b05a-cf01b45c62f8] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,228: INFO/MainProcess] Task baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion[84697d27-e4d0-4dba-a1e5-0bf4e0bc52d2] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,231: INFO/MainProcess] Task baserow.core.trash.tasks.permanently_delete_marked_trash[243f7b80-aa38-45d1-bb33-d854e5b9aea7] received

2025-01-09 12:33:00 [2025-01-09 17:33:00,243: WARNING/ForkPoolWorker-6] 67|2025-01-09 17:33:00.243|INFO|baserow.core.action.handler:clean_up_old_undoable_actions:306 - Cleaned up 0 actions.

2025-01-09 12:33:00 [2025-01-09 17:33:00,244: INFO/ForkPoolWorker-6] Task baserow.core.action.tasks.cleanup_old_actions[71260989-1f61-4155-8f97-03dd5fad6808] succeeded in 0.025521790999846417s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,271: INFO/ForkPoolWorker-7] Task baserow.core.import_export.tasks.mark_import_export_resources_for_deletion[9ba30115-3731-479e-a764-1b0394bcdcdb] succeeded in 0.03676716700010729s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,281: WARNING/ForkPoolWorker-4] 65|2025-01-09 17:33:00.279|INFO|baserow.core.trash.handler:mark_old_trash_for_permanent_deletion:223 - Successfully marked 0 old trash items for deletion as they were older than 72 hours.

2025-01-09 12:33:00 [2025-01-09 17:33:00,281: INFO/ForkPoolWorker-3] Task baserow.core.jobs.tasks.clean_up_jobs[7d1c5790-d576-479e-b05a-cf01b45c62f8] succeeded in 0.040935500000159664s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,282: INFO/ForkPoolWorker-4] Task baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion[84697d27-e4d0-4dba-a1e5-0bf4e0bc52d2] succeeded in 0.039826457999879494s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,282: INFO/ForkPoolWorker-2] Task baserow.core.import_export.tasks.delete_marked_import_export_resources[5b563680-8840-4e61-936c-fa36d50de47c] succeeded in 0.05009608399996068s: None

2025-01-09 12:33:00 [2025-01-09 17:33:00,285: WARNING/ForkPoolWorker-1] 62|2025-01-09 17:33:00.283|INFO|baserow.core.trash.handler:permanently_delete_marked_trash:305 - Successfully deleted 0 trash entries and their associated trashed items.

2025-01-09 12:33:00 [2025-01-09 17:33:00,287: INFO/ForkPoolWorker-1] Task baserow.core.trash.tasks.permanently_delete_marked_trash[243f7b80-aa38-45d1-bb33-d854e5b9aea7] succeeded in 0.041860332999931416s: None

2025-01-09 12:34:00 [2025-01-09 17:34:00,030: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[df89ad7a-e720-4691-b5dd-a0b73ca14452] received

2025-01-09 12:34:00 [2025-01-09 17:34:00,044: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[f80a2d5b-75be-475f-976f-5a1267314b8f] received

2025-01-09 12:34:00 [2025-01-09 17:34:00,044: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[df89ad7a-e720-4691-b5dd-a0b73ca14452] succeeded in 0.00909179199970822s: None

2025-01-09 12:34:00 [2025-01-09 17:34:00,063: INFO/ForkPoolWorker-2] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[f80a2d5b-75be-475f-976f-5a1267314b8f] succeeded in 0.016104459000416682s: None

2025-01-09 12:35:00 [2025-01-09 17:35:00,017: INFO/MainProcess] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[6a6409c0-37cf-4bb3-9148-cd63ac926f60] received

2025-01-09 12:35:00 [2025-01-09 17:35:00,033: INFO/MainProcess] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[885ae309-f829-44b5-9c5c-4ba171742866] received

2025-01-09 12:35:00 [2025-01-09 17:35:00,033: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.beat_send_instant_notifications_summary_by_email[6a6409c0-37cf-4bb3-9148-cd63ac926f60] succeeded in 0.011413625000386673s: None

2025-01-09 12:35:00 [2025-01-09 17:35:00,058: INFO/ForkPoolWorker-6] Task baserow.core.notifications.tasks.singleton_send_instant_notifications_summary_by_email[885ae309-f829-44b5-9c5c-4ba171742866] succeeded in 0.023200167000140937s: None

2025-01-09 12:35:02 [2025-01-09 17:35:02,029: INFO/MainProcess] Task baserow.core.jobs.tasks.run_async_job[63cb2856-b61f-49ca-a547-fe895067c267] received

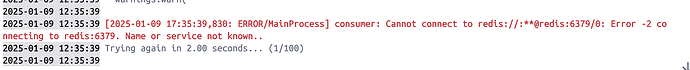

2025-01-09 12:35:07 [2025-01-09 17:35:07,043: ERROR/ForkPoolWorker-6] Task baserow.core.jobs.tasks.run_async_job[63cb2856-b61f-49ca-a547-fe895067c267] raised unexpected: DataError(‘value too long for type character varying(255)\n’)

2025-01-09 12:35:07 Traceback (most recent call last):

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 105, in _execute

2025-01-09 12:35:07 return self.cursor.execute(sql, params)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 psycopg2.errors.StringDataRightTruncation: value too long for type character varying(255)

2025-01-09 12:35:07

2025-01-09 12:35:07

2025-01-09 12:35:07 The above exception was the direct cause of the following exception:

2025-01-09 12:35:07

2025-01-09 12:35:07 Traceback (most recent call last):

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py”, line 453, in trace_task

2025-01-09 12:35:07 R = retval = fun(*args, **kwargs)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py”, line 736, in protected_call

2025-01-09 12:35:07 return self.run(*args, **kwargs)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/core/jobs/tasks.py”, line 33, in run_async_job

2025-01-09 12:35:07 JobHandler.run(job)

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/core/jobs/handler.py”, line 73, in run

2025-01-09 12:35:07 out = job_type.run(job, progress)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/table/job_types.py”, line 62, in run

2025-01-09 12:35:07 new_table_clone = action_type_registry.get_by_type(DuplicateTableActionType).do(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/table/actions.py”, line 336, in do

2025-01-09 12:35:07 new_table_clone = TableHandler().duplicate_table(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/core/telemetry/utils.py”, line 72, in _wrapper

2025-01-09 12:35:07 raise ex

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/core/telemetry/utils.py”, line 68, in _wrapper

2025-01-09 12:35:07 result = wrapped_func(*args, **kwargs)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/table/handler.py”, line 895, in duplicate_table

2025-01-09 12:35:07 imported_tables = database_type.import_tables_serialized(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/application_types.py”, line 518, in import_tables_serialized

2025-01-09 12:35:07 self._import_table_views(serialized_table, id_mapping, files_zip, progress)

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/application_types.py”, line 856, in _import_table_views

2025-01-09 12:35:07 view_type.import_serialized(table, serialized_view, id_mapping, files_zip)

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/views/view_types.py”, line 153, in import_serialized

2025-01-09 12:35:07 grid_view = super().import_serialized(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/backend/src/baserow/contrib/database/views/registries.py”, line 426, in import_serialized

2025-01-09 12:35:07 view_filter_object = ViewFilter.objects.create(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/manager.py”, line 87, in manager_method

2025-01-09 12:35:07 return getattr(self.get_queryset(), name)(*args, **kwargs)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/query.py”, line 679, in create

2025-01-09 12:35:07 obj.save(force_insert=True, using=self.db)

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/base.py”, line 822, in save

2025-01-09 12:35:07 self.save_base(

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/base.py”, line 909, in save_base

2025-01-09 12:35:07 updated = self._save_table(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/base.py”, line 1071, in _save_table

2025-01-09 12:35:07 results = self._do_insert(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/base.py”, line 1112, in _do_insert

2025-01-09 12:35:07 return manager._insert(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/manager.py”, line 87, in manager_method

2025-01-09 12:35:07 return getattr(self.get_queryset(), name)(*args, **kwargs)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/query.py”, line 1847, in _insert

2025-01-09 12:35:07 return query.get_compiler(using=using).execute_sql(returning_fields)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/models/sql/compiler.py”, line 1823, in execute_sql

2025-01-09 12:35:07 cursor.execute(sql, params)

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 122, in execute

2025-01-09 12:35:07 return super().execute(sql, params)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 79, in execute

2025-01-09 12:35:07 return self._execute_with_wrappers(

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 92, in _execute_with_wrappers

2025-01-09 12:35:07 return executor(sql, params, many, context)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 100, in _execute

2025-01-09 12:35:07 with self.db.wrap_database_errors:

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/utils.py”, line 91, in `__exit__`

2025-01-09 12:35:07 raise dj_exc_value.with_traceback(traceback) from exc_value

2025-01-09 12:35:07 File “/baserow/venv/lib/python3.11/site-packages/django/db/backends/utils.py”, line 105, in _execute

2025-01-09 12:35:07 return self.cursor.execute(sql, params)

2025-01-09 12:35:07 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:07 django.db.utils.DataError: value too long for type character varying(255)

2025-01-09 12:35:07

2025-01-09 12:35:39 [2025-01-09 17:35:38,005: WARNING/MainProcess] consumer: Connection to broker lost. Trying to re-establish the connection…

2025-01-09 12:35:39 Traceback (most recent call last):

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/celery/worker/consumer/consumer.py”, line 340, in start

2025-01-09 12:35:39 blueprint.start(self)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/celery/bootsteps.py”, line 116, in start

2025-01-09 12:35:39 step.start(parent)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/celery/worker/consumer/consumer.py”, line 746, in start

2025-01-09 12:35:39 c.loop(*c.loop_args())

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/celery/worker/loops.py”, line 97, in asynloop

2025-01-09 12:35:39 next(loop)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/kombu/asynchronous/hub.py”, line 373, in create_loop

2025-01-09 12:35:39 cb(*cbargs)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/kombu/transport/redis.py”, line 1344, in on_readable

2025-01-09 12:35:39 self.cycle.on_readable(fileno)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/kombu/transport/redis.py”, line 569, in on_readable

2025-01-09 12:35:39 `chan.handlers[type]()`

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/kombu/transport/redis.py”, line 913, in _receive

2025-01-09 12:35:39 ret.append(self._receive_one(c))

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/kombu/transport/redis.py”, line 923, in _receive_one

2025-01-09 12:35:39 response = c.parse_response()

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 837, in parse_response

2025-01-09 12:35:39 response = self._execute(conn, try_read)

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 813, in _execute

2025-01-09 12:35:39 return conn.retry.call_with_retry(

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/retry.py”, line 49, in call_with_retry

2025-01-09 12:35:39 fail(error)

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 815, in `<lambda>`

2025-01-09 12:35:39 lambda error: self._disconnect_raise_connect(conn, error),

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 802, in _disconnect_raise_connect

2025-01-09 12:35:39 raise error

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/retry.py”, line 46, in call_with_retry

2025-01-09 12:35:39 return do()

2025-01-09 12:35:39 ^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 814, in `<lambda>`

2025-01-09 12:35:39 `lambda: command(*args, **kwargs),`

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/client.py”, line 835, in try_read

2025-01-09 12:35:39 return conn.read_response(disconnect_on_error=False, push_request=True)

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/connection.py”, line 512, in read_response

2025-01-09 12:35:39 response = self._parser.read_response(disable_decoding=disable_decoding)

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/_parsers/resp2.py”, line 15, in read_response

2025-01-09 12:35:39 result = self._read_response(disable_decoding=disable_decoding)

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/_parsers/resp2.py”, line 25, in _read_response

2025-01-09 12:35:39 raw = self._buffer.readline()

2025-01-09 12:35:39 ^^^^^^^^^^^^^^^^^^^^^^^

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/_parsers/socket.py”, line 115, in readline

2025-01-09 12:35:39 self._read_from_socket()

2025-01-09 12:35:39 File “/baserow/venv/lib/python3.11/site-packages/redis/_parsers/socket.py”, line 68, in _read_from_socket

2025-01-09 12:35:39 raise ConnectionError(SERVER_CLOSED_CONNECTION_ERROR)

2025-01-09 12:35:39 redis.exceptions.ConnectionError: Connection closed by server.

2025-01-09 12:35:39 [2025-01-09 17:35:39,775: WARNING/MainProcess] /baserow/venv/lib/python3.11/site-packages/celery/worker/consumer/consumer.py:391: CPendingDeprecationWarning:

2025-01-09 12:35:39 In Celery 5.1 we introduced an optional breaking change which

2025-01-09 12:35:39 on connection loss cancels all currently executed tasks with late acknowledgement enabled.

2025-01-09 12:35:39 These tasks cannot be acknowledged as the connection is gone, and the tasks are automatically redelivered

2025-01-09 12:35:39 back to the queue. You can enable this behavior using the worker_cancel_long_running_tasks_on_connection_loss

2025-01-09 12:35:39 setting. In Celery 5.1 it is set to False by default. The setting will be set to True by default in Celery 6.0.

2025-01-09 12:35:39

2025-01-09 12:35:39 warnings.warn(CANCEL_TASKS_BY_DEFAULT, CPendingDeprecationWarning)

2025-01-09 12:35:39

2025-01-09 12:35:39 [2025-01-09 17:35:39,820: WARNING/MainProcess] /baserow/venv/lib/python3.11/site-packages/celery/worker/consumer/consumer.py:508: CPendingDeprecationWarning: The broker_connection_retry configuration setting will no longer determine

2025-01-09 12:35:39 whether broker connection retries are made during startup in Celery 6.0 and above.

2025-01-09 12:35:39 If you wish to retain the existing behavior for retrying connections on startup,

2025-01-09 12:35:39 you should set broker_connection_retry_on_startup to True.

2025-01-09 12:35:39 warnings.warn(

2025-01-09 12:35:39

2025-01-09 12:35:39 [2025-01-09 17:35:39,830: ERROR/MainProcess] consumer: Cannot connect to redis://:@redis:6379/0: Error -2 connecting to redis:6379. Name or service not known…

2025-01-09 12:35:39 Trying again in 2.00 seconds… (1/100)
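Before the broker connection drops, these logs show the duplication job itself failing: `run_async_job` calls `DuplicateTableActionType`, and the failure happens inside `ViewFilter.objects.create(...)` while the views of the copied table are imported, with `value too long for type character varying(255)`. That suggests at least one view filter holds a value longer than 255 characters, which may be related to the text to single select conversion mentioned at the start. The traceback does not name the column, so the sketch below *assumes* the limit applies to the filter’s `value`. It also assumes you have the filters as plain dictionaries with hypothetical keys `id`, `field_id`, `type` and `value`, for example rows read from the view filter table (believed to be `database_viewfilter`, but verify this). PostgreSQL’s `varchar(n)` counts characters, not bytes, so `len()` on a Python `str` is the matching check.

```python
from typing import Dict, Iterable, List

# PostgreSQL's character varying(255) limits the number of characters, not bytes.
VARCHAR_LIMIT = 255


def oversized_filters(filters: Iterable[Dict], limit: int = VARCHAR_LIMIT) -> List[Dict]:
    """Return a summary of filters whose value would overflow varchar(limit).

    Each filter is assumed to be a dict with (hypothetical) keys
    "id", "field_id", "type" and "value"; a None value counts as empty.
    """
    report = []
    for f in filters:
        value = "" if f.get("value") is None else str(f["value"])
        if len(value) > limit:
            report.append({
                "id": f.get("id"),
                "field_id": f.get("field_id"),
                "type": f.get("type"),
                "length": len(value),
            })
    return report
```

If this reports anything on the source table, shortening or removing those filters and retrying the duplication would be one way to test whether they are what breaks the job.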