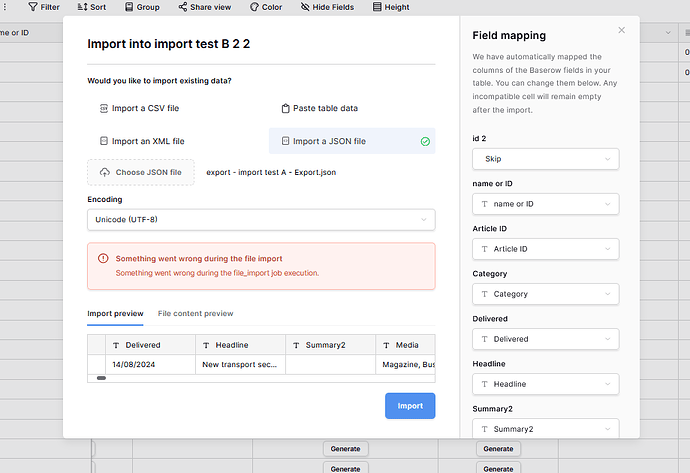

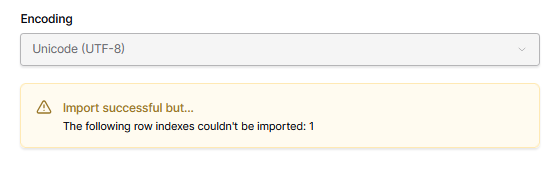

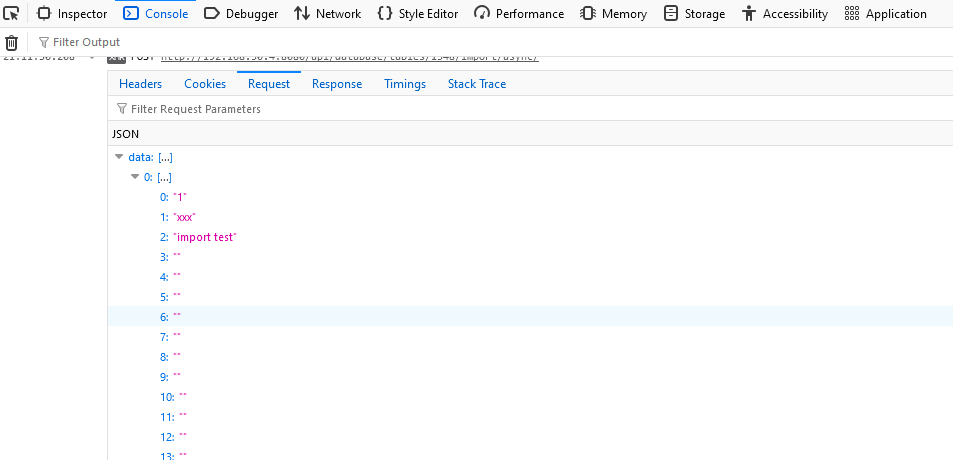

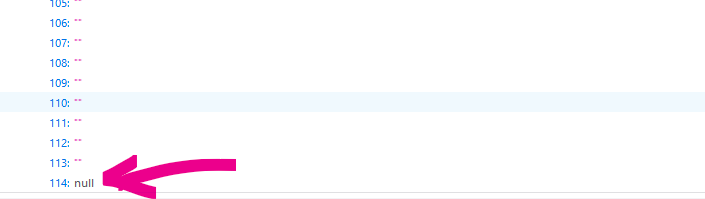

I have a really frustrating issue where certain columns trigger file import errors when adding rows to existing tables.

This happens for larger tables (150+ columns) with multiple column types.

I was not able to pinpoint the exact column type, as it seems to be a combination of a few factors.

This happens with both the standard and customised self-hosted Baserow configs in 1.29.1, although some people on my team seem to have hit similar issues in prior versions.

I also get an error while trying to duplicate these “offending” columns.

Deleting the column (and its duplicates, if present) solves the issue. The problem is that this seems to appear somewhat randomly. My only suspicion is that at least some of the offending columns may have been converted from AI fields into text fields.

This happens for all import types I checked (CSV, paste, JSON).

While looking at the logs, I see this around the time the import error is displayed, and then again at the duplication attempt:

[EXPORT_WORKER][2024-11-16 12:48:20] [2024-11-16 11:48:20,011: INFO/MainProcess] Task baserow.contrib.database.export.tasks.clean_up_old_jobs[220c09d3-6b6b-4e90-be92-e0be002df603] received

[EXPORT_WORKER][2024-11-16 12:48:20] [2024-11-16 11:48:20,031: WARNING/ForkPoolWorker-16] 551|2024-11-16 11:48:20.031|INFO|baserow.contrib.database.export.handler:clean_up_old_jobs:171 - Cleaning up 0 old jobs

[EXPORT_WORKER][2024-11-16 12:48:28] [2024-11-16 11:48:20,032: INFO/ForkPoolWorker-16] Task baserow.contrib.database.export.tasks.clean_up_old_jobs[220c09d3-6b6b-4e90-be92-e0be002df603] succeeded in 0.019795440999814673s: None

[BACKEND][2024-11-16 12:48:28] 127.0.0.1:56314 - “GET /api/_health/ HTTP/1.1” 200

[EXPORT_WORKER][2024-11-16 12:48:28] [2024-11-16 11:48:28,631: INFO/MainProcess] Task baserow.core.jobs.tasks.run_async_job[3cf80e93-0d95-401d-86b6-5b6b72a28c8e] received

[CELERY_WORKER][2024-11-16 12:48:28] [2024-11-16 11:43:20,027: INFO/ForkPoolWorker-16] Task baserow.contrib.database.fields.tasks.delete_mentions_marked_for_deletion[8d2cebc6-adba-434f-88ad-917aa86bc3d6] succeeded in 0.01912591999985125s: None

[EXPORT_WORKER][2024-11-16 12:48:28] [2024-11-16 11:48:28,701: WARNING/ForkPoolWorker-16] 551|2024-11-16 11:48:28.701|INFO|baserow.core.action.signals:log_action_receiver:28 - do: workspace=312 action_type=import_rows user=1

[CELERY_WORKER][2024-11-16 12:48:28] [2024-11-16 11:48:28,708: INFO/MainProcess] Task baserow.ws.tasks.broadcast_to_permitted_users[d2d2b3ef-cfcf-4527-bd38-c6bf0b76b887] received

[BACKEND][2024-11-16 12:48:29] 192.168.50.15:0 - “POST /api/database/tables/1526/import/async/ HTTP/1.1” 200

[BACKEND][2024-11-16 12:48:30] 127.0.0.1:33942 - “GET /api/_health/ HTTP/1.1” 200

[BACKEND][2024-11-16 12:48:31] 192.168.50.15:0 - “GET /api/jobs/211/ HTTP/1.1” 200

[BACKEND][2024-11-16 12:48:31] 192.168.50.15:0 - “GET /api/database/views/grid/5600/?count=true HTTP/1.1” 200

[BACKEND][2024-11-16 12:48:31] 192.168.50.15:0 - “GET /api/database/views/grid/5600/?limit=120&offset=15120&include=row_metadata HTTP/1.1” 200

[BEAT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:20,010: INFO/MainProcess] Scheduler: Sending due task baserow.contrib.database.export.tasks.clean_up_old_jobs() (baserow.contrib.database.export.tasks.clean_up_old_jobs)

[BEAT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:40,014: INFO/MainProcess] Scheduler: Sending due task baserow.core.action.tasks.cleanup_old_actions() (baserow.core.action.tasks.cleanup_old_actions)

[EXPORT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:28,716: INFO/ForkPoolWorker-16] Task baserow.core.jobs.tasks.run_async_job[3cf80e93-0d95-401d-86b6-5b6b72a28c8e] succeeded in 0.08506660500006546s: None

[BEAT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:40,015: INFO/MainProcess] Scheduler: Sending due task baserow.core.import_export.tasks.delete_marked_import_export_resources() (baserow.core.import_export.tasks.delete_marked_import_export_resources)

[EXPORT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:40,016: INFO/MainProcess] Task baserow.core.action.tasks.cleanup_old_actions[72999844-b60a-4bb6-bb13-6336b5a2c4bb] received

[BEAT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:40,016: INFO/MainProcess] Scheduler: Sending due task baserow.core.import_export.tasks.mark_import_export_resources_for_deletion() (baserow.core.import_export.tasks.mark_import_export_resources_for_deletion)

[BEAT_WORKER][2024-11-16 12:48:40] [2024-11-16 11:48:40,016: INFO/MainProcess] Scheduler: Sending due task baserow.core.jobs.tasks.clean_up_jobs() (baserow.core.jobs.tasks.clean_up_jobs)
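The worker lines above are not in chronological order: the outer container timestamp and the inner Celery timestamp differ, and for example an 11:43:20 entry appears between 11:48:28 entries. In case it helps anyone reading along, here is a minimal Python sketch that re-sorts such lines by the inner timestamp. The regex assumes the line layout shown in this excerpt, and the function names `inner_timestamp` and `sort_by_inner_timestamp` are my own, not part of Baserow or Celery.

```python
import re
from datetime import datetime

# Inner Celery timestamp, e.g. "[2024-11-16 11:48:20,011: INFO/MainProcess]".
# The outer "[2024-11-16 12:48:20]" prefix has no ",mmm: " part, so it is not matched.
INNER_TS = re.compile(r"\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),(\d{3}): ")


def inner_timestamp(line):
    """Return the inner timestamp of a worker log line, or None if absent."""
    match = INNER_TS.search(line)
    if match is None:
        return None
    return datetime.strptime(
        match.group(1) + "," + match.group(2), "%Y-%m-%d %H:%M:%S,%f"
    )


def sort_by_inner_timestamp(lines):
    """Sort worker lines by inner timestamp.

    Lines without an inner timestamp (e.g. BACKEND or POSTGRES lines)
    are dropped, since they cannot be placed reliably.
    """
    stamped = [(inner_timestamp(line), line) for line in lines]
    keyed = [(ts, line) for ts, line in stamped if ts is not None]
    return [line for ts, line in sorted(keyed, key=lambda pair: pair[0])]
```

Feeding it the pasted log text split on newlines gives the Celery entries in the order they actually happened, which makes it easier to see what ran right around the `import_rows` action.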

########

[BACKEND][2024-11-16 16:07:10] 127.0.0.1:60450 - “GET /api/_health/ HTTP/1.1” 200

[BACKEND][2024-11-16 16:07:12] 127.0.0.1:46720 - “GET /api/_health/ HTTP/1.1” 200

[POSTGRES][2024-11-16 16:07:15] 2024-11-16 15:06:58.628 UTC [361] LOG: checkpoint starting: wal

[POSTGRES][2024-11-16 16:07:16] 2024-11-16 15:07:15.585 UTC [361] LOG: checkpoint complete: wrote 4402 buffers (26.9%); 0 WAL file(s) added, 1 removed, 32 recycled; write=15.588 s, sync=1.153 s, total=16.958 s; sync files=306, longest=0.141 s, average=0.004 s; distance=540670 kB, estimate=555044 kB

[POSTGRES][2024-11-16 16:07:16] 2024-11-16 15:07:16.427 UTC [361] LOG: checkpoints are occurring too frequently (18 seconds apart)

[POSTGRES][2024-11-16 16:07:16] 2024-11-16 15:07:16.427 UTC [361] HINT: Consider increasing the configuration parameter “max_wal_size”.

[POSTGRES][2024-11-16 16:07:31] 2024-11-16 15:07:16.427 UTC [361] LOG: checkpoint starting: wal

[POSTGRES][2024-11-16 16:07:31] 2024-11-16 15:07:31.269 UTC [361] LOG: checkpoint complete: wrote 4077 buffers (24.9%); 0 WAL file(s) added, 2 removed, 31 recycled; write=12.964 s, sync=1.659 s, total=14.842 s; sync files=306, longest=0.173 s, average=0.006 s; distance=537296 kB, estimate=553269 kB

[POSTGRES][2024-11-16 16:07:31] 2024-11-16 15:07:31.736 UTC [361] LOG: checkpoints are occurring too frequently (15 seconds apart)

[POSTGRES][2024-11-16 16:07:31] 2024-11-16 15:07:31.736 UTC [361] HINT: Consider increasing the configuration parameter “max_wal_size”.

[BACKEND][2024-11-16 16:07:32] 127.0.0.1:46728 - “GET /api/_health/ HTTP/1.1” 200

[POSTGRES][2024-11-16 16:07:37] 2024-11-16 15:07:31.736 UTC [361] LOG: checkpoint starting: wal

[POSTGRES][2024-11-16 16:07:37] 2024-11-16 15:07:37.323 UTC [13771] ERROR: canceling autovacuum task

[POSTGRES][2024-11-16 16:07:37] 2024-11-16 15:07:37.323 UTC [13771] CONTEXT: while vacuuming index “tbl_tsv_21662_idx” of relation “public.database_table_1545”

[EXPORT_WORKER][2024-11-16 16:07:42] [2024-11-16 15:07:00,047: INFO/ForkPoolWorker-16] Task