Hello,

I continue to experience problems installing Baserow on a Synology NAS.

Here is my Portainer stack:

version: '3.3'
services:
  baserow:
    container_name: baserow
    ports:
      - '9988:80'
    environment:
      - 'BASEROW_PUBLIC_URL=http://192.168.86.200:9988'
    volumes:
      - '/volume2/docker/baserow:/baserow/data'
    restart: always
    image: 'baserow/baserow:1.13.0'

And here are the logs:

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.jobs.tasks.clean_up_jobs

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.jobs.tasks.run_async_job

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.snapshots.tasks.delete_application_snapshot

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.snapshots.tasks.delete_expired_snapshots

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.tasks.sync_templates_task

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.trash.tasks.permanently_delete_marked_trash

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.usage.tasks.run_calculate_storage

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.core.user.tasks.check_pending_account_deletion

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_channel_group

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_group

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_groups

[CELERY_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_users

[CELERY_WORKER][2022-12-13 15:14:55] . baserow_premium.license.tasks.license_check

[CELERY_WORKER][2022-12-13 15:14:55] . djcelery_email_send_multiple

[EXPORT_WORKER][2022-12-13 15:14:55] INFO 2022-12-13 15:14:54,037 xmlschema.include_schema:1250- Resource 'XMLSchema.xsd' is already loaded

[EXPORT_WORKER][2022-12-13 15:14:55]

[EXPORT_WORKER][2022-12-13 15:14:55] -------------- export-worker@ea4ec9820bfa v5.2.3 (dawn-chorus)

[EXPORT_WORKER][2022-12-13 15:14:55] --- ***** -----

[EXPORT_WORKER][2022-12-13 15:14:55] -- ******* ---- Linux-4.4.180+-x86_64-with-glibc2.31 2022-12-13 15:14:55

[EXPORT_WORKER][2022-12-13 15:14:55] - *** --- * ---

[EXPORT_WORKER][2022-12-13 15:14:55] - ** ---------- [config]

[EXPORT_WORKER][2022-12-13 15:14:55] - ** ---------- .> app: baserow:0x7fe07158c7f0

[EXPORT_WORKER][2022-12-13 15:14:55] - ** ---------- .> transport: redis://:**@localhost:6379/0

[EXPORT_WORKER][2022-12-13 15:14:55] - ** ---------- .> results: disabled://

[EXPORT_WORKER][2022-12-13 15:14:55] - *** --- * --- .> concurrency: 1 (prefork)

[EXPORT_WORKER][2022-12-13 15:14:55] -- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

[EXPORT_WORKER][2022-12-13 15:14:55] --- ***** -----

[EXPORT_WORKER][2022-12-13 15:14:55] -------------- [queues]

[EXPORT_WORKER][2022-12-13 15:14:55] .> export exchange=export(direct) key=export

[EXPORT_WORKER][2022-12-13 15:14:55]

[EXPORT_WORKER][2022-12-13 15:14:55]

[EXPORT_WORKER][2022-12-13 15:14:55] [tasks]

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.contrib.database.export.tasks.clean_up_old_jobs

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.contrib.database.export.tasks.run_export_job

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.contrib.database.table.tasks.run_row_count_job

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.contrib.database.webhooks.tasks.call_webhook

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.action.tasks.cleanup_old_actions

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.jobs.tasks.clean_up_jobs

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.jobs.tasks.run_async_job

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.snapshots.tasks.delete_application_snapshot

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.snapshots.tasks.delete_expired_snapshots

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.tasks.sync_templates_task

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.trash.tasks.mark_old_trash_for_permanent_deletion

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.trash.tasks.permanently_delete_marked_trash

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.usage.tasks.run_calculate_storage

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.core.user.tasks.check_pending_account_deletion

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_channel_group

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_group

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_groups

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow.ws.tasks.broadcast_to_users

[EXPORT_WORKER][2022-12-13 15:14:55] . baserow_premium.license.tasks.license_check

[EXPORT_WORKER][2022-12-13 15:14:55] . djcelery_email_send_multiple

[CELERY_WORKER][2022-12-13 15:14:55]

[CELERY_WORKER][2022-12-13 15:14:55] [2022-12-13 15:14:55,365: INFO/MainProcess] Connected to redis://:**@localhost:6379/0

[EXPORT_WORKER][2022-12-13 15:14:55]

[EXPORT_WORKER][2022-12-13 15:14:55] [2022-12-13 15:14:55,405: INFO/MainProcess] Connected to redis://:**@localhost:6379/0

[BACKEND][2022-12-13 15:14:56] Waiting for PostgreSQL to become available attempt 1/5 ...

[BACKEND][2022-12-13 15:14:56] Error: Failed to connect to the postgresql database at localhost

[BACKEND][2022-12-13 15:14:56] Please see the error below for more details:

[BACKEND][2022-12-13 15:14:56] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused

[BACKEND][2022-12-13 15:14:56] Is the server running on that host and accepting TCP/IP connections?

[BACKEND][2022-12-13 15:14:56] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address

[BACKEND][2022-12-13 15:14:56] Is the server running on that host and accepting TCP/IP connections?

[BACKEND][2022-12-13 15:14:56]

[CELERY_WORKER][2022-12-13 15:14:56] [2022-12-13 15:14:55,368: INFO/MainProcess] mingle: searching for neighbors

[CELERY_WORKER][2022-12-13 15:14:56] [2022-12-13 15:14:56,378: INFO/MainProcess] mingle: all alone

[EXPORT_WORKER][2022-12-13 15:14:56] [2022-12-13 15:14:55,408: INFO/MainProcess] mingle: searching for neighbors

[EXPORT_WORKER][2022-12-13 15:14:56] [2022-12-13 15:14:56,417: INFO/MainProcess] mingle: all alone

[BACKEND][2022-12-13 15:14:58] Waiting for PostgreSQL to become available attempt 2/5 ...

[BACKEND][2022-12-13 15:14:58] Error: Failed to connect to the postgresql database at localhost

[BACKEND][2022-12-13 15:14:58] Please see the error below for more details:

[BACKEND][2022-12-13 15:14:58] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused

[BACKEND][2022-12-13 15:14:58] Is the server running on that host and accepting TCP/IP connections?

[BACKEND][2022-12-13 15:14:58] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address

[BACKEND][2022-12-13 15:14:58] Is the server running on that host and accepting TCP/IP connections?

2022-12-13 15:14:58,265 INFO spawned: 'postgresql' with pid 412

2022-12-13 15:14:58,265 INFO spawned: 'postgresql' with pid 412

[BACKEND][2022-12-13 15:14:58]

[POSTGRES][2022-12-13 15:14:58] 2022-12-13 15:14:58.322 UTC [412] FATAL: data directory "/baserow/data/postgres" has invalid permissions

[POSTGRES][2022-12-13 15:14:58] 2022-12-13 15:14:58.322 UTC [412] DETAIL: Permissions should be u=rwx (0700) or u=rwx,g=rx (0750).

2022-12-13 15:14:58,323 INFO exited: postgresql (exit status 1; not expected)

2022-12-13 15:14:58,323 INFO exited: postgresql (exit status 1; not expected)

2022-12-13 15:14:58,323 INFO gave up: postgresql entered FATAL state, too many start retries too quickly

2022-12-13 15:14:58,323 INFO gave up: postgresql entered FATAL state, too many start retries too quickly

2022-12-13 15:14:58,323 INFO reaped unknown pid 422 (exit status 0)

2022-12-13 15:14:58,323 INFO reaped unknown pid 422 (exit status 0)

Baserow was stopped or one of it's services crashed, see the logs above for more details.

2022-12-13 15:15:00,326 WARN received SIGTERM indicating exit request

2022-12-13 15:15:00,326 WARN received SIGTERM indicating exit request

2022-12-13 15:15:00,326 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die

2022-12-13 15:15:00,326 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die

[BACKEND][2022-12-13 15:15:00] Waiting for PostgreSQL to become available attempt 3/5 ...

[BACKEND][2022-12-13 15:15:00] Error: Failed to connect to the postgresql database at localhost

[BACKEND][2022-12-13 15:15:00] Please see the error below for more details:

[BACKEND][2022-12-13 15:15:00] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused

[BACKEND][2022-12-13 15:15:00] Is the server running on that host and accepting TCP/IP connections?

[BACKEND][2022-12-13 15:15:00] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address

[BACKEND][2022-12-13 15:15:00] Is the server running on that host and accepting TCP/IP connections?

[BACKEND][2022-12-13 15:15:00]

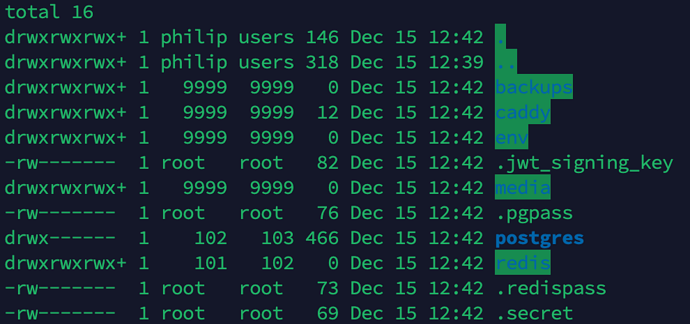

It seems clear that I have a PostgreSQL problem (though I don't know why) and possibly a permissions problem, even though I already ran chmod 777 on the entire baserow directory, including its subdirectories.
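Going by the DETAIL line in the log, PostgreSQL only accepts mode 0700 or 0750 on its data directory, so a directory set to 777 would be rejected by that same check. The sketch below is one way to inspect the mode over SSH. The helper name `check_pgdata_mode` is hypothetical, and the host path `/volume2/docker/baserow/postgres` is an assumption derived from the volume mapping in the stack (`/baserow/data/postgres` inside the container). Whether Synology ACLs or directory ownership also play a role is not something the log confirms.

```shell
#!/bin/sh
# Hypothetical helper: report whether a directory has a mode that PostgreSQL
# accepts for its data directory (0700 or 0750, per the DETAIL log line).
check_pgdata_mode() {
    dir="$1"
    # stat -c '%a' prints the octal permission bits (GNU coreutils / BusyBox).
    mode=$(stat -c '%a' "$dir") || return 2
    case "$mode" in
        700|750) echo "ok: $dir has mode $mode" ;;
        *)       echo "bad: $dir has mode $mode (want 700 or 750)"; return 1 ;;
    esac
}

# Assumed host-side path, derived from the volume mapping in the stack.
PGDATA_HOST="${PGDATA_HOST:-/volume2/docker/baserow/postgres}"

# Only run the check if the directory exists on this machine.
if [ -d "$PGDATA_HOST" ]; then
    check_pgdata_mode "$PGDATA_HOST" || echo "try: chmod 700 $PGDATA_HOST"
fi
```

If the check reports a mode other than 700 or 750, tightening only the `postgres` subdirectory (rather than the whole tree) and restarting the container would be the obvious next thing to try.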

Any help would be much appreciated! Thanks.