Hello,

I run the latest version of Baserow in a Docker container under CapRover. The command line is:

    docker run \
      --name=srv-captain--xxxxx-baserow.1.xxxxxxxxxxxxxxxxxxxxxxxxx \
      --hostname=000000000000 \
      --env=BASEROW_PUBLIC_URL=https://xxxxxxxxxxxxx.xxxxx.xxxxxxxxxxxxx.xxx \
      --env=DATABASE_HOST=xxx-xxxxxxx--xxxxx-xx \
      --env=DATABASE_NAME=postgres \
      --env=DATABASE_USER=postgres \
      --env=DATABASE_PASSWORD=xxxxxxxxxxxxxxxx \
      --env=DATABASE_PORT=5432 \
      --env=FROM_EMAIL=noreply@xxxxxxxxxxxxx.xxx \
      --env=EMAIL_SMTP=yes \
      --env=EMAIL_SMTP_HOST=smtp.mandrillapp.com \
      --env=EMAIL_SMTP_PORT=587 \
      --env=EMAIL_SMTP_USER=xxxxxxxxx \
      --env=EMAIL_SMTP_PASSWORD=xxxxxxxxxxxxxxxxxxxxxx \
      --env=PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin \
      --env=UID=9999 \
      --env=GID=9999 \
      --env=DOCKER_USER=baserow_docker_user \
      --env=DATA_DIR=/baserow/data \
      --env=BASEROW_PLUGIN_DIR=/baserow/data/plugins \
      --env=POSTGRES_VERSION=11 \
      --env=POSTGRES_LOCATION=/etc/postgresql/11/main \
      --env=BASEROW_IMAGE_TYPE=all-in-one \
      --expose=80 \
      --log-opt max-size=512m \
      --runtime=runc \
      --detach=true \
      baserow/baserow:1.14.0 start

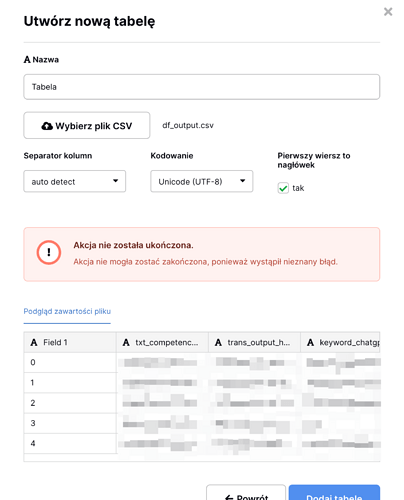

I am trying to create a new table by uploading a medium-sized CSV file (25 MB).

Baserow fails with the following error:

    [BACKEND][2023-01-30 22:34:07] [2023-01-30 22:34:07 +0000] [336] [ERROR] Exception in ASGI application
    [BACKEND][2023-01-30 22:34:07] Traceback (most recent call last):
    [BACKEND][2023-01-30 22:34:07]   File "/baserow/venv/lib/python3.9/site-packages/uvicorn/protocols/http/httptools_impl.py", line 375, in run_asgi
    [BACKEND][2023-01-30 22:34:07]     result = await app(self.scope, self.receive, self.send)
    [BACKEND][2023-01-30 22:34:07]   File "/baserow/venv/lib/python3.9/site-packages/uvicorn/middleware/proxy_headers.py", line 75, in __call__
    [BACKEND][2023-01-30 22:34:07]     return await self.app(scope, receive, send)
    [BACKEND][2023-01-30 22:34:07]   File "/baserow/venv/lib/python3.9/site-packages/channels/routing.py", line 71, in __call__
    [BACKEND][2023-01-30 22:34:07]     return await application(scope, receive, send)
    [BACKEND][2023-01-30 22:34:07]   File "/baserow/venv/lib/python3.9/site-packages/django/core/handlers/asgi.py", line 149, in __call__
    [BACKEND][2023-01-30 22:34:07]     body_file = await self.read_body(receive)
    [BACKEND][2023-01-30 22:34:07]   File "/baserow/venv/lib/python3.9/site-packages/django/core/handlers/asgi.py", line 181, in read_body
    [BACKEND][2023-01-30 22:34:07]     body_file.write(message['body'])
    [BACKEND][2023-01-30 22:34:07]   File "/usr/lib/python3.9/tempfile.py", line 894, in write
    [BACKEND][2023-01-30 22:34:07]     rv = file.write(s)
    [BACKEND][2023-01-30 22:34:07] OSError: [Errno 28] No space left on device

The error does not seem accurate: there is plenty of free space on the host server as well as in the container.
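One thing that might still be worth checking is the free space on the specific filesystem that Python's `tempfile` module writes to, since that can differ from the container's root or data volume. The following is only a minimal sketch; the helper name `temp_dir_usage` is made up for illustration, and it would need to be run with the Python interpreter inside the container to be meaningful.

```python
import shutil
import tempfile


def temp_dir_usage():
    """Return (path, total_bytes, free_bytes) for the directory tempfile uses."""
    # gettempdir() honours TMPDIR, TEMP and TMP before falling back to /tmp etc.
    path = tempfile.gettempdir()
    usage = shutil.disk_usage(path)
    return path, usage.total, usage.free


if __name__ == "__main__":
    path, total, free = temp_dir_usage()
    mib = 1024 ** 2
    print(f"{path}: {free / mib:.0f} MiB free of {total / mib:.0f} MiB")
```

If the reported free space is smaller than the upload, that would explain the `OSError` even though other filesystems have room.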

In the Baserow source code I could find 'tempfile' usage only in the 'import_from_airtable.py' and 'backup_runner.py' files, and I don't think either of them is involved here.
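Judging by the traceback, the `tempfile` write seems to come from Django's ASGI handler (`read_body` in `django/core/handlers/asgi.py`) rather than from Baserow's own code. As far as I understand, Django buffers the request body in a `tempfile.SpooledTemporaryFile`, which stays in memory up to a size limit and is then written to the temp directory. The sketch below only illustrates that rollover behaviour with the standard library; `spills_to_disk` is a hypothetical helper, and `_rolled` is a private CPython attribute used here purely for demonstration.

```python
import tempfile


def spills_to_disk(payload: bytes, max_size: int) -> bool:
    """Report whether writing payload pushes a spooled temp file onto disk."""
    with tempfile.SpooledTemporaryFile(max_size=max_size, mode="w+b") as body_file:
        body_file.write(payload)
        # _rolled is private to CPython's implementation; it becomes True once
        # the buffered data exceeds max_size and a real file is created.
        return body_file._rolled


if __name__ == "__main__":
    print(spills_to_disk(b"x" * 10, max_size=100))   # small body stays in memory
    print(spills_to_disk(b"x" * 200, max_size=100))  # large body goes to disk
```

If that reading is right, a 25 MB upload would be written to the temp directory before Baserow's import code ever sees it.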

Any ideas?