Hello @Mansur and @marcus,

I understand the concerns you both raised regarding the increase in disk usage with every update to formula fields.

Understanding the Issue:

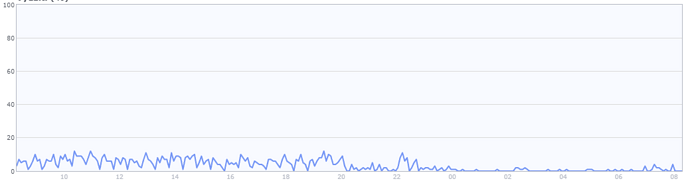

In PostgreSQL, the MVCC (Multi-Version Concurrency Control) model ensures that an UPDATE is essentially a combination of a soft delete and an insert. This means the old version of the row gets marked as obsolete, while a new version is added to the table. As a result, until the vacuum reclaims the space, the space consumption for updated columns essentially doubles.

For smaller tables (with under 10k rows), this additional space is marginal. However, for larger tables, every update can easily account for an additional 10 ~ 100MB. This continues until the vacuum process runs and frees up the aforementioned space.

Improvement:

I’ve opened a ticket regarding this issue here: link. My proposed fix aims to exclude rows from the update where the value remains unchanged after formula recalculation. This approach will be effective in scenarios like the example provided by @marcus, where the age of individuals only changes on birthdays. However, for formulas like now(), where all values change with every update, this fix won’t provide significant benefits, but I think this is ok for big tables with a lot of values updated.

How to reduce the number of updates:

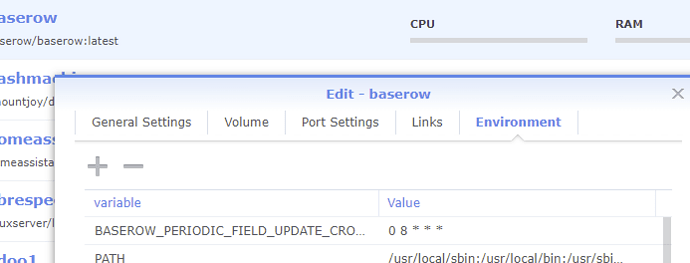

While we work towards merging the fix, I recommend reducing the frequency of updates. You can achieve this by adjusting the environment variable BASEROW_PERIODIC_FIELD_UPDATE_CRONTAB.

For instance, you can set it in the .env file or as a backend environment variable like so: BASEROW_PERIODIC_FIELD_UPDATE_CRONTAB=0 8 * * * This will ensure the periodic update runs every day at 8:00 AM.

For further customization of the crontab, please refer to crontab.guru. For guidance on setting environment variables, visit the Baserow documentation.

This could be a temporary workaround if your formulas don’t change row values several times a day, otherwise it might make sense to keep it even after the fix is merged.

Hope this helps!