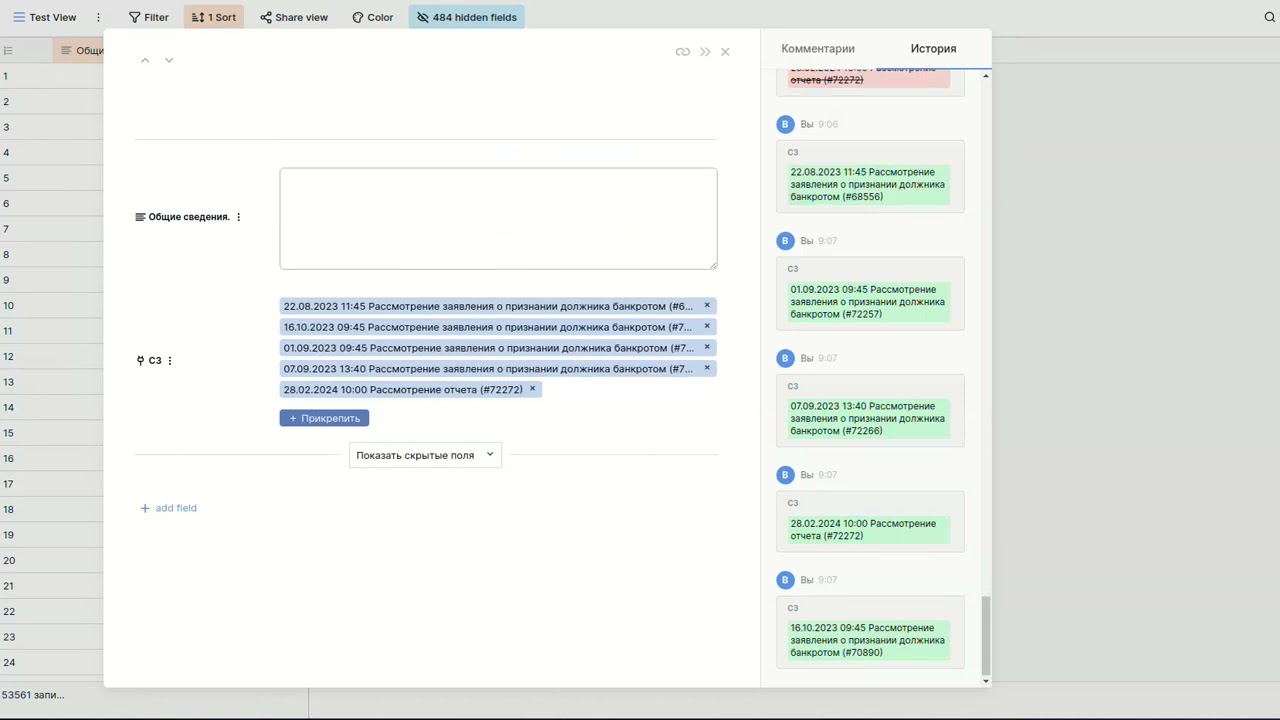

We have been seeing a problem in Baserow where updating records takes a long time. The number of fields and rows in the table is visible in the video. What should we do to solve this problem? Perhaps you can recommend some Postgres settings?

Self-hosted

- Installed with Docker Compose; the database is PostgreSQL 16 or 15, which we installed ourselves

- Server config

Processors: 128 x 3.67 GHz (1 x AMD EPYC 7713)

Memory: 512 GB

Drive: NVMe 480 GB

LAN: 1 Gb

- Baserow version 1.21 (October release)

.env

```
# An example .env file for use with only the default `docker-compose.yml`.
# Developers: Use .env.dev.example when using the `docker-compose.dev.yml` override.
#
# See https://baserow.io/docs/installation%2Fconfiguration for more details on the
# env vars.
#
# The following 3 environment variables are mandatory and must be set by you to secure
# random values. Use a command like 'tr -dc 'a-z0-9' < /dev/urandom | head -c50' to
# generate a unique value for each one.
SECRET_KEY=
DATABASE_PASSWORD=
REDIS_PASSWORD=

# To increase the security of the SECRET_KEY, you should also set
# BASEROW_JWT_SIGNING_KEY=

# The browser URL you will access Baserow with. Used to connect to the api, generate emails, etc.
BASEROW_PUBLIC_URL=http://ip

# Uncomment and set/change these if you want to use an external postgres.
DATABASE_USER=baserow
DATABASE_NAME=baserow
DATABASE_HOST=ip
DATABASE_PORT=5432
# DATABASE_URL=
# DATABASE_OPTIONS=

# Uncomment and set these if you want to use an external redis.
# REDIS_HOST=
# REDIS_PORT=
# REDIS_PROTOCOL=
# REDIS_URL=
# REDIS_USER=

# Uncomment and set these to enable Baserow to send emails.
# EMAIL_SMTP=
# EMAIL_SMTP_HOST=
# EMAIL_SMTP_PORT=
# EMAIL_SMTP_USE_TLS=
# EMAIL_SMTP_USER=
# EMAIL_SMTP_PASSWORD=
# FROM_EMAIL=

# Uncomment and set these to use AWS S3 bucket to store user files.
# See https://baserow.io/docs/installation%2Fconfiguration#user-file-upload-configuration for more
# AWS_ACCESS_KEY_ID=
# AWS_SECRET_ACCESS_KEY=
# AWS_STORAGE_BUCKET_NAME=
# AWS_S3_REGION_NAME=
# AWS_S3_ENDPOINT_URL=
# AWS_S3_CUSTOM_DOMAIN=

# Misc settings see https://baserow.io/docs/installation%2Fconfiguration for info
BASEROW_AMOUNT_OF_WORKERS=64
# BASEROW_ROW_PAGE_SIZE_LIMIT=
# BATCH_ROWS_SIZE_LIMIT=
# INITIAL_TABLE_DATA_LIMIT=
# BASEROW_FILE_UPLOAD_SIZE_LIMIT_MB=
# BASEROW_MAX_IMPORT_FILE_SIZE_MB=
# BASEROW_UNIQUE_ROW_VALUES_SIZE_LIMIT=
# BASEROW_EXTRA_ALLOWED_HOSTS=
# ADDITIONAL_APPS=
# ADDITIONAL_MODULES=
# BASEROW_ENABLE_SECURE_PROXY_SSL_HEADER=
# MIGRATE_ON_STARTUP=TRUE
# SYNC_TEMPLATES_ON_STARTUP=
# DONT_UPDATE_FORMULAS_AFTER_MIGRATION=
BASEROW_TRIGGER_SYNC_TEMPLATES_AFTER_MIGRATION=FALSE
# BASEROW_SYNC_TEMPLATES_TIME_LIMIT=
# BASEROW_BACKEND_DEBUG=
# BASEROW_BACKEND_LOG_LEVEL=
# FEATURE_FLAGS=
# PRIVATE_BACKEND_URL=
PUBLIC_BACKEND_URL=http://ip:8000
PUBLIC_WEB_FRONTEND_URL=http://ip:3000
# MEDIA_URL=
# MEDIA_ROOT=
# BASEROW_WEBHOOKS_ALLOW_PRIVATE_ADDRESS=
# BASEROW_WEBHOOKS_URL_REGEX_BLACKLIST=
# BASEROW_WEBHOOKS_IP_WHITELIST=
# BASEROW_WEBHOOKS_IP_BLACKLIST=
# BASEROW_WEBHOOKS_URL_CHECK_TIMEOUT_SECS=
# BASEROW_WEBHOOKS_MAX_CONSECUTIVE_TRIGGER_FAILURES=
# BASEROW_WEBHOOKS_MAX_RETRIES_PER_CALL=
# BASEROW_WEBHOOKS_MAX_PER_TABLE=
# BASEROW_WEBHOOKS_MAX_CALL_LOG_ENTRIES=
# BASEROW_WEBHOOKS_REQUEST_TIMEOUT_SECONDS=
# BASEROW_AIRTABLE_IMPORT_SOFT_TIME_LIMIT=
# HOURS_UNTIL_TRASH_PERMANENTLY_DELETED=
# OLD_ACTION_CLEANUP_INTERVAL_MINUTES=
# MINUTES_UNTIL_ACTION_CLEANED_UP=
# BASEROW_GROUP_STORAGE_USAGE_QUEUE=
# DISABLE_ANONYMOUS_PUBLIC_VIEW_WS_CONNECTIONS=
# BASEROW_WAIT_INSTEAD_OF_409_CONFLICT_ERROR=
# BASEROW_DISABLE_MODEL_CACHE=
# BASEROW_JOB_SOFT_TIME_LIMIT=
# BASEROW_JOB_CLEANUP_INTERVAL_MINUTES=
# BASEROW_ROW_HISTORY_CLEANUP_INTERVAL_MINUTES=
# BASEROW_ROW_HISTORY_RETENTION_DAYS=
# BASEROW_MAX_ROW_REPORT_ERROR_COUNT=
# BASEROW_JOB_EXPIRATION_TIME_LIMIT=
# BASEROW_JOBS_FRONTEND_POLLING_TIMEOUT_MS=
# BASEROW_PLUGIN_DIR=
# BASEROW_MAX_SNAPSHOTS_PER_GROUP=
# BASEROW_ENABLE_OTEL=
# BASEROW_DISABLE_PUBLIC_URL_CHECK=
# DOWNLOAD_FILE_VIA_XHR=
# BASEROW_DISABLE_GOOGLE_DOCS_FILE_PREVIEW=
# BASEROW_PLUGIN_GIT_REPOS=
# BASEROW_PLUGIN_URLS=
# BASEROW_ACCESS_TOKEN_LIFETIME_MINUTES=
# BASEROW_REFRESH_TOKEN_LIFETIME_HOURS=
# BASEROW_MAX_CONCURRENT_USER_REQUESTS=
# BASEROW_CONCURRENT_USER_REQUESTS_THROTTLE_TIMEOUT=
# BASEROW_ENTERPRISE_AUDIT_LOG_CLEANUP_INTERVAL_MINUTES=
# BASEROW_ENTERPRISE_AUDIT_LOG_RETENTION_DAYS=
# BASEROW_ALLOW_MULTIPLE_SSO_PROVIDERS_FOR_SAME_ACCOUNT=
# BASEROW_PERIODIC_FIELD_UPDATE_CRONTAB=
# BASEROW_PERIODIC_FIELD_UPDATE_QUEUE_NAME=

# Only sample 10% of requests by default
# OTEL_TRACES_SAMPLER=traceidratio
# OTEL_TRACES_SAMPLER_ARG=0.1
# Always sample the root django and celery spans
# OTEL_PER_MODULE_SAMPLER_OVERRIDES="opentelemetry.instrumentation.celery=always_on,opentelemetry.instrumentation.django=always_on"

# BASEROW_CACHALOT_ENABLED=
# BASEROW_CACHALOT_MODE=
# BASEROW_CACHALOT_ONLY_CACHABLE_TABLES=
# BASEROW_CACHALOT_UNCACHABLE_TABLES=
# BASEROW_CACHALOT_TIMEOUT=
# BASEROW_AUTO_INDEX_VIEW_ENABLED=
# BASEROW_PERSONAL_VIEW_LOWEST_ROLE_ALLOWED=
# BASEROW_DISABLE_LOCKED_MIGRATIONS=
```
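The comments at the top of the .env file suggest generating the three mandatory secrets with `tr -dc 'a-z0-9' < /dev/urandom | head -c50`. For reference, here is a minimal Python sketch of the same idea, assuming the same character set and length. The function names `generate_secret` and `render_mandatory_lines` are illustrative and not part of Baserow.

```python
import secrets
import string

# Same character set as the `tr -dc 'a-z0-9'` command mentioned in the .env comments.
ALPHABET = string.ascii_lowercase + string.digits


def generate_secret(length: int = 50) -> str:
    """Return a random string of lowercase letters and digits."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def render_mandatory_lines() -> list[str]:
    """Build one KEY=value line for each of the three mandatory variables."""
    names = ("SECRET_KEY", "DATABASE_PASSWORD", "REDIS_PASSWORD")
    return [f"{name}={generate_secret()}" for name in names]


if __name__ == "__main__":
    # Print lines that can be pasted into .env; each run gives new values.
    for line in render_mandatory_lines():
        print(line)
```

Each variable gets its own independently generated value, as the .env comment asks for.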

docker-compose.yml

```yaml
version: "3.4"
########################################################################################
#
# READ ME FIRST!
#
# Use the much simpler `docker-compose.all-in-one.yml` instead of this file
# unless you are an advanced user!
#
# This compose file runs every service separately using a Caddy reverse proxy to route
# requests to the backend or web-frontend, handle websockets and to serve uploaded files
# . Even if you have your own http proxy we recommend to simply forward requests to it
# as it is already properly configured for Baserow.
#
# If you wish to continue with this more advanced compose file, it is recommended that
# you set environment variables using the .env.example file by:
# 1. `cp .env.example .env`
# 2. Edit .env and fill in the first 3 variables.
# 3. Set further environment variables as you wish.
#
# More documentation can be found in:
# https://baserow.io/docs/installation%2Finstall-with-docker-compose
#
########################################################################################

# See https://baserow.io/docs/installation%2Fconfiguration for more details on these
# backend environment variables, their defaults if left blank etc.
x-backend-variables: &backend-variables
  # Most users should only need to set these first four variables.
  SECRET_KEY: ${SECRET_KEY:?}
  BASEROW_JWT_SIGNING_KEY: ${BASEROW_JWT_SIGNING_KEY:-}
  DATABASE_PASSWORD: ${DATABASE_PASSWORD:?}
  REDIS_PASSWORD: ${REDIS_PASSWORD:?}
  # If you manually change this line make sure you also change the duplicate line in
  # the web-frontend service.
  BASEROW_PUBLIC_URL: ${BASEROW_PUBLIC_URL-http://localhost}

  # Set these if you want to use an external postgres instead of the db service below.
  DATABASE_USER: ${DATABASE_USER:-baserow}
  DATABASE_NAME: ${DATABASE_NAME:-baserow}
  DATABASE_HOST:
  DATABASE_PORT:
  DATABASE_OPTIONS:
  DATABASE_URL:

  # Set these if you want to use an external redis instead of the redis service below.
  REDIS_HOST:
  REDIS_PORT:
  REDIS_PROTOCOL:
  REDIS_URL:
  REDIS_USER:

  # Set these to enable Baserow to send emails.
  EMAIL_SMTP:
  EMAIL_SMTP_HOST:
  EMAIL_SMTP_PORT:
  EMAIL_SMTP_USE_TLS:
  EMAIL_SMTP_USE_SSL:
  EMAIL_SMTP_USER:
  EMAIL_SMTP_PASSWORD:
  EMAIL_SMTP_SSL_CERTFILE_PATH:
  EMAIL_SMTP_SSL_KEYFILE_PATH:
  FROM_EMAIL:

  # Set these to use AWS S3 bucket to store user files.
  AWS_ACCESS_KEY_ID:
  AWS_SECRET_ACCESS_KEY:
  AWS_STORAGE_BUCKET_NAME:
  AWS_S3_REGION_NAME:
  AWS_S3_ENDPOINT_URL:
  AWS_S3_CUSTOM_DOMAIN:

  # Misc settings see https://baserow.io/docs/installation%2Fconfiguration for info
  BASEROW_AMOUNT_OF_WORKERS: 64
  BASEROW_ROW_PAGE_SIZE_LIMIT:
  BATCH_ROWS_SIZE_LIMIT:
  INITIAL_TABLE_DATA_LIMIT:
  BASEROW_FILE_UPLOAD_SIZE_LIMIT_MB:
  BASEROW_UNIQUE_ROW_VALUES_SIZE_LIMIT:
  BASEROW_EXTRA_ALLOWED_HOSTS:
  ADDITIONAL_APPS:
  BASEROW_PLUGIN_GIT_REPOS:
  BASEROW_PLUGIN_URLS:
  BASEROW_ENABLE_SECURE_PROXY_SSL_HEADER:
  MIGRATE_ON_STARTUP: ${MIGRATE_ON_STARTUP:-true}
  SYNC_TEMPLATES_ON_STARTUP: ${SYNC_TEMPLATES_ON_STARTUP:-true}
  DONT_UPDATE_FORMULAS_AFTER_MIGRATION:
  BASEROW_TRIGGER_SYNC_TEMPLATES_AFTER_MIGRATION: FALSE
  BASEROW_SYNC_TEMPLATES_TIME_LIMIT:
  BASEROW_BACKEND_DEBUG:
  BASEROW_BACKEND_LOG_LEVEL:
  FEATURE_FLAGS:
  BASEROW_ENABLE_OTEL:
  BASEROW_DEPLOYMENT_ENV:
  OTEL_EXPORTER_OTLP_ENDPOINT:
  OTEL_RESOURCE_ATTRIBUTES:
  POSTHOG_PROJECT_API_KEY:
  POSTHOG_HOST:
  PRIVATE_BACKEND_URL: http://backend:8000
  PUBLIC_BACKEND_URL:
  PUBLIC_WEB_FRONTEND_URL: http://ip/
  MEDIA_URL:
  MEDIA_ROOT:
  BASEROW_AIRTABLE_IMPORT_SOFT_TIME_LIMIT:
  HOURS_UNTIL_TRASH_PERMANENTLY_DELETED:
  OLD_ACTION_CLEANUP_INTERVAL_MINUTES:
  MINUTES_UNTIL_ACTION_CLEANED_UP:
  BASEROW_GROUP_STORAGE_USAGE_QUEUE:
  DISABLE_ANONYMOUS_PUBLIC_VIEW_WS_CONNECTIONS:
  BASEROW_WAIT_INSTEAD_OF_409_CONFLICT_ERROR:
  BASEROW_DISABLE_MODEL_CACHE:
  BASEROW_PLUGIN_DIR:
  BASEROW_JOB_EXPIRATION_TIME_LIMIT:
  BASEROW_JOB_CLEANUP_INTERVAL_MINUTES:
  BASEROW_ROW_HISTORY_CLEANUP_INTERVAL_MINUTES:
  BASEROW_ROW_HISTORY_RETENTION_DAYS:
  BASEROW_MAX_ROW_REPORT_ERROR_COUNT:
  BASEROW_JOB_SOFT_TIME_LIMIT:
  BASEROW_FRONTEND_JOBS_POLLING_TIMEOUT_MS:
  BASEROW_INITIAL_CREATE_SYNC_TABLE_DATA_LIMIT:
  BASEROW_MAX_SNAPSHOTS_PER_GROUP:
  BASEROW_SNAPSHOT_EXPIRATION_TIME_DAYS:
  BASEROW_WEBHOOKS_ALLOW_PRIVATE_ADDRESS:
  BASEROW_WEBHOOKS_IP_BLACKLIST:
  BASEROW_WEBHOOKS_IP_WHITELIST:
  BASEROW_WEBHOOKS_URL_REGEX_BLACKLIST:
  BASEROW_WEBHOOKS_URL_CHECK_TIMEOUT_SECS:
  BASEROW_WEBHOOKS_MAX_CONSECUTIVE_TRIGGER_FAILURES:
  BASEROW_WEBHOOKS_MAX_RETRIES_PER_CALL:
  BASEROW_WEBHOOKS_MAX_PER_TABLE:
  BASEROW_WEBHOOKS_MAX_CALL_LOG_ENTRIES:
  BASEROW_WEBHOOKS_REQUEST_TIMEOUT_SECONDS:
  BASEROW_ENTERPRISE_AUDIT_LOG_CLEANUP_INTERVAL_MINUTES:
  BASEROW_ENTERPRISE_AUDIT_LOG_RETENTION_DAYS:
  BASEROW_ALLOW_MULTIPLE_SSO_PROVIDERS_FOR_SAME_ACCOUNT:
  BASEROW_ROW_COUNT_JOB_CRONTAB:
  BASEROW_STORAGE_USAGE_JOB_CRONTAB:
  BASEROW_SEAT_USAGE_JOB_CRONTAB:
  BASEROW_PERIODIC_FIELD_UPDATE_CRONTAB:
  BASEROW_PERIODIC_FIELD_UPDATE_TIMEOUT_MINUTES:
  BASEROW_PERIODIC_FIELD_UPDATE_QUEUE_NAME:
  BASEROW_MAX_CONCURRENT_USER_REQUESTS:
  BASEROW_CONCURRENT_USER_REQUESTS_THROTTLE_TIMEOUT:
  BASEROW_OSS_ONLY:
  OTEL_TRACES_SAMPLER:
  OTEL_TRACES_SAMPLER_ARG:
  OTEL_PER_MODULE_SAMPLER_OVERRIDES:
  BASEROW_CACHALOT_ENABLED:
  BASEROW_CACHALOT_MODE:
  BASEROW_CACHALOT_ONLY_CACHABLE_TABLES:
  BASEROW_CACHALOT_UNCACHABLE_TABLES:
  BASEROW_CACHALOT_TIMEOUT:
  BASEROW_AUTO_INDEX_VIEW_ENABLED:
  BASEROW_PERSONAL_VIEW_LOWEST_ROLE_ALLOWED:
  BASEROW_DISABLE_LOCKED_MIGRATIONS:
  BASEROW_USE_PG_FULLTEXT_SEARCH: FALSE
  BASEROW_AUTO_VACUUM:
  BASEROW_BUILDER_DOMAINS:

services:
  # A caddy reverse proxy sitting in-front of all the services. Responsible for routing
  # requests to either the backend or web-frontend and also serving user uploaded files
  # from the media volume.
  caddy:
    image: caddy:2
    restart: unless-stopped
    environment:
      # Controls what port the Caddy server binds to inside its container.
      BASEROW_CADDY_ADDRESSES: ${BASEROW_CADDY_ADDRESSES:-:80}
      PRIVATE_WEB_FRONTEND_URL: ${PRIVATE_WEB_FRONTEND_URL:-http://web-frontend:3000}
      PRIVATE_BACKEND_URL: ${PRIVATE_BACKEND_URL:-http://backend:8000}
    ports:
      - "${HOST_PUBLISH_IP:-0.0.0.0}:${WEB_FRONTEND_PORT:-80}:80"
      - "${HOST_PUBLISH_IP:-0.0.0.0}:${WEB_FRONTEND_SSL_PORT:-443}:443"
    volumes:
      - $PWD/Caddyfile:/etc/caddy/Caddyfile
      - media:/baserow/media
      - caddy_config:/config
      - caddy_data:/data
    networks:
      local:

  backend:
    image: baserow_backend:latest
    build:
      dockerfile: ./backend/Dockerfile
      context: .
    restart: unless-stopped
    environment:
      <<: *backend-variables
    depends_on:
      - redis
    volumes:
      - media:/baserow/media
    networks:
      local:

  web-frontend:
    image: baserow_web-frontend:latest
    build:
      dockerfile: ./web-frontend/Dockerfile
      context: .
    restart: unless-stopped
    environment:
      BASEROW_PUBLIC_URL: ${BASEROW_PUBLIC_URL-http://localhost}
      PRIVATE_BACKEND_URL: ${PRIVATE_BACKEND_URL:-http://backend:8000}
      PUBLIC_BACKEND_URL:
      PUBLIC_WEB_FRONTEND_URL:
      BASEROW_DISABLE_PUBLIC_URL_CHECK:
      INITIAL_TABLE_DATA_LIMIT:
      DOWNLOAD_FILE_VIA_XHR:
      BASEROW_DISABLE_GOOGLE_DOCS_FILE_PREVIEW:
      HOURS_UNTIL_TRASH_PERMANENTLY_DELETED:
      DISABLE_ANONYMOUS_PUBLIC_VIEW_WS_CONNECTIONS:
      FEATURE_FLAGS:
      ADDITIONAL_MODULES:
      BASEROW_MAX_IMPORT_FILE_SIZE_MB:
      BASEROW_MAX_SNAPSHOTS_PER_GROUP:
      BASEROW_ENABLE_OTEL:
      BASEROW_DEPLOYMENT_ENV:
      BASEROW_OSS_ONLY:
      BASEROW_USE_PG_FULLTEXT_SEARCH: FALSE
      POSTHOG_PROJECT_API_KEY:
      POSTHOG_HOST:
      BASEROW_UNIQUE_ROW_VALUES_SIZE_LIMIT:
      BASEROW_ROW_PAGE_SIZE_LIMIT:
      BASEROW_BUILDER_DOMAINS:
    depends_on:
      - backend
    networks:
      local:

  celery:
    image: baserow/backend:1.20.2
    restart: unless-stopped
    environment:
      <<: *backend-variables
    command: celery-worker
    # The backend image's baked in healthcheck defaults to the django healthcheck
    # override it to the celery one here.
    healthcheck:
      test: [ "CMD-SHELL", "/baserow/backend/docker/docker-entrypoint.sh celery-worker-healthcheck" ]
    depends_on:
      - backend
    volumes:
      - media:/baserow/media
    networks:
      local:

  celery-export-worker:
    image: baserow/backend:1.20.2
    restart: unless-stopped
    command: celery-exportworker
    environment:
      <<: *backend-variables
    # The backend image's baked in healthcheck defaults to the django healthcheck
    # override it to the celery one here.
    healthcheck:
      test: [ "CMD-SHELL", "/baserow/backend/docker/docker-entrypoint.sh celery-exportworker-healthcheck" ]
    depends_on:
      - backend
    volumes:
      - media:/baserow/media
    networks:
      local:

  celery-beat-worker:
    image: baserow/backend:1.20.2
    restart: unless-stopped
    command: celery-beat
    environment:
      <<: *backend-variables
    # See https://github.com/sibson/redbeat/issues/129#issuecomment-1057478237
    stop_signal: SIGQUIT
    depends_on:
      - backend
    volumes:
      - media:/baserow/media
    networks:
      local:

  redis:
    image: redis:6
    command: redis-server --requirepass ${REDIS_PASSWORD:?}
    healthcheck:
      test: [ "CMD", "redis-cli", "ping" ]
    networks:
      local:

  # By default, the media volume will be owned by root on startup. Ensure it is owned by
  # the same user that django is running as, so it can write user files.
  volume-permissions-fixer:
    image: bash:4.4
    command: chown 9999:9999 -R /baserow/media
    volumes:
      - media:/baserow/media
    networks:
      local:

volumes:
  pgdata:
  media:
  caddy_data:
  caddy_config:

networks:
  local:
    driver: bridge
```
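Most entries in the .env file above are commented out, so the few values that are actually set are easy to miss when comparing it with the compose file. Below is a minimal sketch of a helper that lists only the active `KEY=value` lines. It is a simplification that ignores quoting and `export` prefixes, and `active_settings` and `SAMPLE` are illustrative names, not part of Baserow or Docker.

```python
def active_settings(env_text: str) -> dict[str, str]:
    """Return the uncommented KEY=value pairs from .env-style text.

    Simplified on purpose: blank lines, comment lines and lines without
    '=' are skipped; quotes and inline comments are not interpreted.
    """
    settings: dict[str, str] = {}
    for raw in env_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


# A short excerpt in the same shape as the .env shown earlier.
SAMPLE = """\
# Misc settings
BASEROW_AMOUNT_OF_WORKERS=64
# BASEROW_ROW_PAGE_SIZE_LIMIT=
DATABASE_HOST=ip
DATABASE_PORT=5432
PUBLIC_WEB_FRONTEND_URL=http://ip:3000
"""

if __name__ == "__main__":
    for key, value in active_settings(SAMPLE).items():
        print(f"{key}={value}")
```

Running it against the real file (for example by reading `.env` with `pathlib.Path.read_text`) gives a short list that can be checked against the values hardcoded in `x-backend-variables`.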

Video showing the problem: