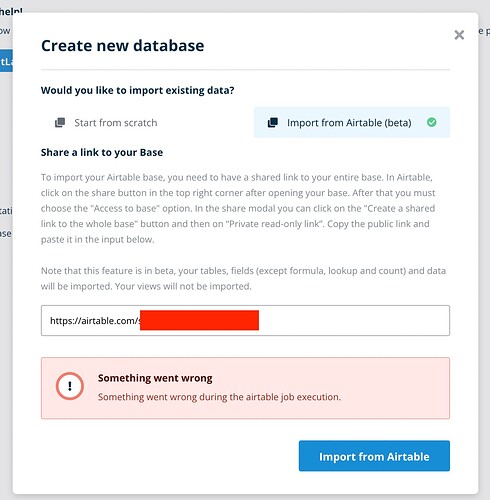

I can't import a base from Airtable.

The import stops at 42% with the error shown in the screenshot.

The same base imports successfully on the hosted version at baserow.io.

Here are the docker-compose logs:

celery-export-worker_1 | [2022-12-25 15:51:32,449: ERROR/ForkPoolWorker-1] Task baserow.core.jobs.tasks.run_async_job[f9225aa8-b89a-42a7-b3e9-493197e26c43] raised unexpected: KeyError(43)

celery-export-worker_1 | Traceback (most recent call last):

celery-export-worker_1 | File "/baserow/venv/lib/python3.9/site-packages/celery/app/trace.py", line 451, in trace_task

celery-export-worker_1 | R = retval = fun(*args, **kwargs)

celery-export-worker_1 | File "/baserow/venv/lib/python3.9/site-packages/celery/app/trace.py", line 734, in protected_call

celery-export-worker_1 | return self.run(*args, **kwargs)

celery-export-worker_1 | File "/baserow/backend/src/baserow/core/jobs/tasks.py", line 73, in run_async_job

celery-export-worker_1 | raise e

celery-export-worker_1 | File "/baserow/backend/src/baserow/core/jobs/tasks.py", line 36, in run_async_job

celery-export-worker_1 | JobHandler().run(job)

celery-export-worker_1 | File "/baserow/backend/src/baserow/core/jobs/handler.py", line 62, in run

celery-export-worker_1 | return job_type.run(job, progress)

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/airtable/job_type.py", line 118, in run

celery-export-worker_1 | databases, id_mapping = AirtableHandler.import_from_airtable_to_group(

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/airtable/handler.py", line 609, in import_from_airtable_to_group

celery-export-worker_1 | databases, id_mapping = CoreHandler().import_applications_to_group(

celery-export-worker_1 | File "/baserow/backend/src/baserow/core/handler.py", line 1344, in import_applications_to_group

celery-export-worker_1 | imported_application = application_type.import_serialized(

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/application_types.py", line 445, in import_serialized

celery-export-worker_1 | self.import_tables_serialized(

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/application_types.py", line 289, in import_tables_serialized

celery-export-worker_1 | view_type.import_serialized(

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/views/view_types.py", line 131, in import_serialized

celery-export-worker_1 | grid_view = super().import_serialized(

celery-export-worker_1 | File "/baserow/backend/src/baserow/contrib/database/views/registries.py", line 346, in import_serialized

celery-export-worker_1 | value_provider_type.set_import_serialized_value(

celery-export-worker_1 | File "/baserow/premium/backend/src/baserow_premium/views/decorator_value_provider_types.py", line 127, in set_import_serialized_value

celery-export-worker_1 | new_field_id = id_mapping["database_fields"][filter["field"]]

celery-export-worker_1 | KeyError: 43
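
Reading the last frame, my guess is that a view decoration filter refers to field id 43, and that id is not present in `id_mapping["database_fields"]` when the view is imported. Below is a minimal sketch with made-up data that reproduces the same `KeyError` and shows what a defensive lookup could look like. Only the `id_mapping["database_fields"]` lookup is taken from the traceback; the ids, the filter list, and the helpers `reproduce_error` and `remap_filters` are hypothetical and are not Baserow code.

```python
# Hypothetical data: maps old field ids to the ids of the newly imported fields.
# Field 43 is deliberately absent, mirroring the KeyError in the log.
id_mapping = {"database_fields": {41: 101, 42: 102}}

# Hypothetical decoration filters; the second one points at the missing field.
decoration_filters = [{"field": 42}, {"field": 43}]


def reproduce_error(filters, mapping):
    """Do the same direct lookup as the failing line; return the missing key, if any."""
    try:
        for item in filters:
            mapping["database_fields"][item["field"]]
    except KeyError as exc:
        return exc.args[0]
    return None


def remap_filters(filters, mapping):
    """Remap filters whose field id is known; collect the ids that are not."""
    remapped, skipped = [], []
    for item in filters:
        new_id = mapping["database_fields"].get(item["field"])
        if new_id is None:
            skipped.append(item["field"])
            continue
        remapped.append({**item, "field": new_id})
    return remapped, skipped


missing = reproduce_error(decoration_filters, id_mapping)
remapped, skipped = remap_filters(decoration_filters, id_mapping)
print("missing key:", missing)
print("remapped:", remapped, "skipped:", skipped)
```

Whether skipping such a filter is the right behavior for the real import is a separate question; the sketch only illustrates why the direct lookup raises.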