I'm having an issue with a self-hosted Baserow instance when exporting a table.

I have S3 storage configured, with files stored on Backblaze's S3-compatible storage. This works fine (upload, download, etc.), but when I export one specific table, Baserow creates the file on S3 and then returns a download link that does not work. This is the S3 response:

<Error>

<Code>InvalidRequest</Code>

<Message>The server cannot or will not process the request due to something that is perceived to be a client error eg malformed request syntax invalid request message framing or deceptive request routing</Message>

</Error>

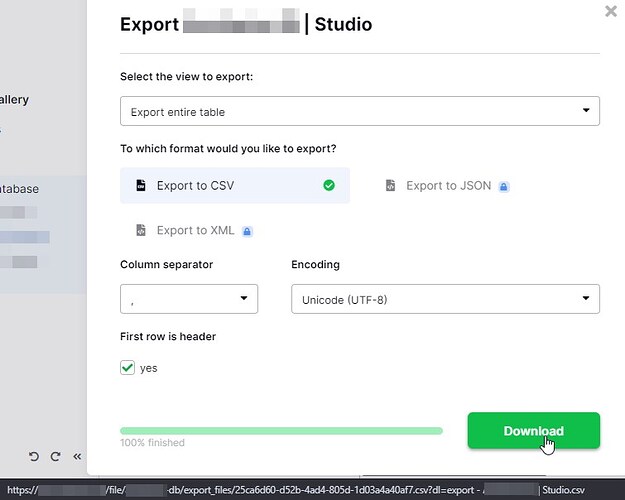

Download link:

https://S3.DOMAIN.COM/file/a-db/export_files/2e0d7c95-b3a5-45ae-a042-cc5fa852d58f.csv?dl=export%20-%20Mysite.com%20|%20Studio.csv

If I manually remove the query string from the link:

https://S3.DOMAIN.COM/file/a-db/export_files/2e0d7c95-b3a5-45ae-a042-cc5fa852d58f.csv

I can download the file normally…

Thoughts:

It looks like a bug. It could be related to the table name in the query string, which the S3 storage is rejecting.

Some of my other tables can be exported without issue, with the query string left in place. So it may depend on which characters are passed in the URI query. In the failing link above, the spaces are encoded as %20 but the | character is passed through unencoded.
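One way to test this hypothesis is to percent-encode the file name fully before it goes into the `dl` parameter. Below is a minimal sketch using Python's standard `urllib.parse.quote`. The helper name `build_download_url` is hypothetical and is not Baserow's actual code, and it is only my assumption that the raw `|` is what Backblaze rejects.

```python
from urllib.parse import quote


def build_download_url(base_url: str, download_name: str) -> str:
    """Append a fully percent-encoded `dl` parameter to an export file URL.

    Hypothetical helper for illustration only; not part of Baserow.
    """
    # safe="" makes quote() encode every reserved character,
    # including "|" and "/", not just spaces.
    return f"{base_url}?dl={quote(download_name, safe='')}"


base = "https://S3.DOMAIN.COM/file/a-db/export_files/2e0d7c95-b3a5-45ae-a042-cc5fa852d58f.csv"
url = build_download_url(base, "export - Mysite.com | Studio.csv")
print(url)
```

If the link produced this way downloads correctly while the original one fails, that would point at the unencoded character rather than at the query string as a whole.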

Possible solution:

When S3 storage is configured, remove the query string from the export download link and return a plain link to the file.
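As a rough sketch of what I mean, the query string could be dropped with Python's standard `urllib.parse` functions. The function name `strip_query` is made up for this example and is not taken from the Baserow code base.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_query(url: str) -> str:
    """Return the URL without its query string and fragment.

    Hypothetical helper for illustration only; not part of Baserow.
    """
    parts = urlsplit(url)
    # Keep scheme, host and path; drop the query and the fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


export_link = (
    "https://S3.DOMAIN.COM/file/a-db/export_files/"
    "2e0d7c95-b3a5-45ae-a042-cc5fa852d58f.csv"
    "?dl=export%20-%20Mysite.com%20|%20Studio.csv"
)
plain_link = strip_query(export_link)
print(plain_link)
```

The trade-off is that without the `dl` parameter the file would be downloaded under its UUID name instead of the readable export name.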

Notes:

Everything else works fine, no logged errors.

Installed Baserow using the official guide

Additionally set up an external PostgreSQL database

Additionally set up external S3 storage (aws)

Installation:

Baserow Version: 1.20.2 (Docker)

PostgreSQL: v16.1

Docker version: v24.0.7

System:

Ubuntu 20.04.6 LTS (4 cores, 32 GB RAM, 256 SSD)

S3 Provider: Backblaze