Supposedly, being lazy is a positive quality for programmers. That’s convenient, because I’m the laziest of them all. My daughter’s school wanted us to keep a reading log: the names of the books she reads and how long she reads them. This record-keeping turned out to be more of a hassle than expected, and I’d rather spend the time reading and teaching my daughters than doing admin work.

My solution: send an audio recording to a Telegram bot, and the reading activity is recorded automatically.

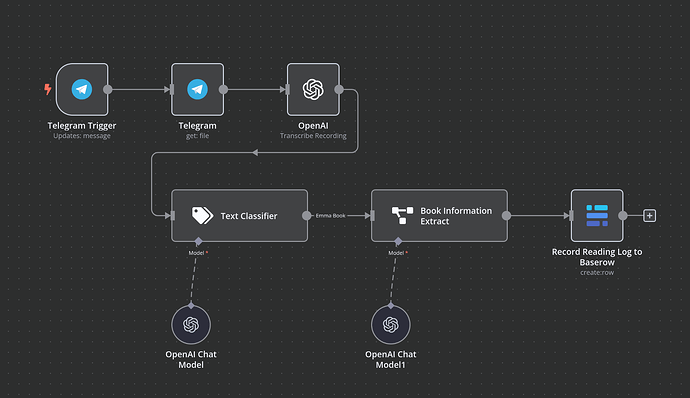

Here’s the workflow in n8n. Everything starts with the Telegram message (and its audio) and ends with a new record in a Baserow table. I’ll go over it in detail below.

First, the objectives of the project

I wanted a no-code or low-code solution that takes me from an audio message to a database record. Writing code is not a problem for me, but deploying it to production (for example, as a Telegram bot running in a Docker container on a VPS) is a bit of a hassle for a one-off project like this. Hosting it on an automation platform is easier, and n8n is a good fit.

Step 1: The audio message

The first step is an audio message. It’s quick and easy to record, much like a WhatsApp voice message (press and hold to record), but implemented with a Telegram bot. I chose Telegram for a few reasons. First, its API and interoperability features are better than those of other chat platforms. Second, the Android UI is similar to WhatsApp’s, so I can record a message quickly from my smartphone. Alternatively, you could set up a web frontend with Streamlit, which has a voice recording plugin, but you’d have to host it yourself.

In n8n, you add the Telegram message trigger, followed by a second node that downloads the file when the message is a voice message.

If you’re implementing this in code, you need the Telegram Bot API, possibly from Python. Python client libraries for it tend to use async interfaces, which is a bit of a hassle, but you could also use webhooks and handle each event like a normal REST API call.
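Here is a minimal sketch of the webhook route using only Python’s standard library, assuming Telegram POSTs each update as JSON to this server. The Bot API’s `getFile` method resolves a voice message’s `file_id` to a `file_path`, which is then downloaded from the `/file/bot<token>/` URL. The `TELEGRAM_BOT_TOKEN` environment variable, the port and the handler name are my own choices for illustration.

```python
import json
import os
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional

API_BASE = "https://api.telegram.org"
# Hypothetical variable name: however you store the token BotFather gave you.
BOT_TOKEN = os.environ.get("TELEGRAM_BOT_TOKEN", "")


def extract_voice_file_id(update: dict) -> Optional[str]:
    """Return the file_id of a voice message in a Telegram Update, else None."""
    voice = (update.get("message") or {}).get("voice")
    return voice["file_id"] if voice else None


def file_download_url(token: str, file_path: str) -> str:
    """URL from which the Bot API serves a file previously resolved by getFile."""
    return f"{API_BASE}/file/bot{token}/{file_path}"


def download_voice(token: str, file_id: str) -> bytes:
    """Resolve file_id with getFile, then download the raw audio bytes."""
    query = urllib.parse.urlencode({"file_id": file_id})
    with urllib.request.urlopen(f"{API_BASE}/bot{token}/getFile?{query}") as resp:
        payload = json.load(resp)
    if not payload.get("ok"):
        raise RuntimeError(f"getFile failed: {payload}")
    file_path = payload["result"]["file_path"]
    with urllib.request.urlopen(file_download_url(token, file_path)) as resp:
        return resp.read()


class TelegramWebhook(BaseHTTPRequestHandler):
    """Handles each update Telegram POSTs to the webhook like a REST call."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        update = json.loads(self.rfile.read(length) or b"{}")
        file_id = extract_voice_file_id(update)
        if file_id:
            audio = download_voice(BOT_TOKEN, file_id)
            # Hand the audio to the transcription step here.
            print(f"received {len(audio)} bytes of voice audio")
        # Answer 200 so Telegram treats the update as delivered.
        self.send_response(200)
        self.end_headers()


if __name__ == "__main__":
    HTTPServer(("", 8443), TelegramWebhook).serve_forever()
```

Telegram only delivers webhooks over HTTPS (to ports 443, 80, 88 or 8443), so in practice this would sit behind a TLS-terminating reverse proxy, and you would register its public URL once with the Bot API’s `setWebhook` method.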

Step 2: Transcribe audio to text

To interpret the message, we first have to transcribe the audio. For this, n8n offers OpenAI Whisper and AWS Transcribe. In this workflow I used OpenAI models for all the AI steps, but other providers should work equally well.

In code, you would simply call OpenAI’s Whisper speech-to-text API. You could also call Azure Speech to Text, which works equally well, but on Azure you have to supply the source language.
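A minimal sketch with the official `openai` Python SDK, assuming `audio` holds the bytes downloaded from Telegram and `client` is an `openai.OpenAI()` instance (which reads `OPENAI_API_KEY` from the environment). The request-building helper is split out only so it can be checked without calling the API. `whisper-1` is OpenAI’s Whisper model name; the `voice.ogg` filename is my assumption, chosen because Telegram voice notes are OGG/Opus audio.

```python
def transcription_kwargs(audio: bytes, filename: str = "voice.ogg") -> dict:
    """Arguments for OpenAI's speech-to-text endpoint.

    The SDK accepts a (filename, bytes) tuple, so in-memory audio can be
    uploaded with a filename whose extension names the audio format.
    """
    return {"model": "whisper-1", "file": (filename, audio)}


def transcribe(audio: bytes, client) -> str:
    """Transcribe a voice note; `client` is assumed to be an openai.OpenAI instance."""
    result = client.audio.transcriptions.create(**transcription_kwargs(audio))
    return result.text
```

Usage: after `from openai import OpenAI`, call `transcribe(audio, OpenAI())`. Whisper detects the spoken language on its own, though the endpoint also accepts an optional `language` hint.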

Step 3: Text classifier

This step took me a while to get right. I thought I had to use the AI Agent node, but my problem was simpler: I wanted to know whether the audio message was about books or the reading log and, if so, route processing to a specific branch. That’s because I’d like to reuse this Telegram bot and workflow for other use cases, such as adding something to a shopping list or an event to a calendar.

The AI Agent is probably unnecessary unless you’re dealing with an interaction that involves multiple messages and tool calling. The Text Classifier node simply classifies the text into the categories you define. I have a single category, “Emma Book Reading Log”, and the model detects it very well.

In code, you could call the OpenAI API with tool calling and let the model pick the tool that matches the situation. Many other LLM providers, such as Anthropic and Mistral, support tool calling too.
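A minimal sketch of the tool-calling approach with the `openai` Python SDK, assuming `client` is an `openai.OpenAI()` instance. Each category becomes a parameterless tool, and whichever tool the model calls (if any) is the category. The category names, descriptions, system prompt and the `gpt-4o-mini` model are my assumptions, not what n8n’s Text Classifier does internally.

```python
from typing import Optional

# Hypothetical categories; each one becomes a tool the model may choose to call.
CATEGORIES = {
    "emma_book_reading_log": "The message reports a book Emma read or how long she read.",
    "shopping_list": "The message asks to add an item to the shopping list.",
}


def build_tools(categories: dict) -> list:
    """Turn each category into an OpenAI function-calling tool with no parameters."""
    return [
        {
            "type": "function",
            "function": {
                "name": name,
                "description": description,
                "parameters": {"type": "object", "properties": {}},
            },
        }
        for name, description in categories.items()
    ]


def classify(text: str, client, model: str = "gpt-4o-mini") -> Optional[str]:
    """Return the category the model picked, or None if it called no tool.

    `client` is assumed to be an openai.OpenAI instance.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {
                "role": "system",
                "content": "Call the tool that matches the user's message. "
                "If none matches, answer without calling a tool.",
            },
            {"role": "user", "content": text},
        ],
        tools=build_tools(CATEGORIES),
        tool_choice="auto",
    )
    tool_calls = response.choices[0].message.tool_calls
    return tool_calls[0].function.name if tool_calls else None
```

A `None` result means the message fits no branch, which plays the same role as the classifier’s fallback in n8n.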

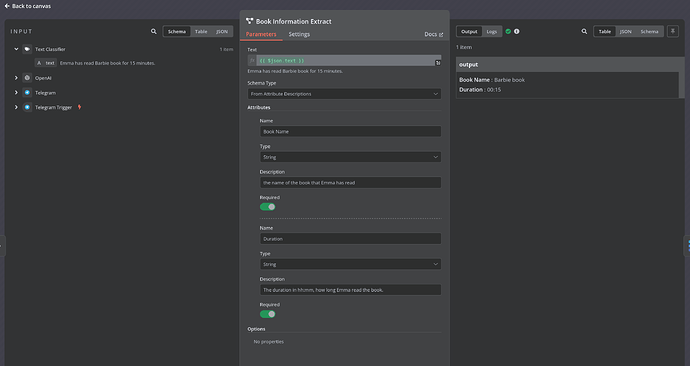

Step 4: Extract book information

Here, we go from unstructured text data (on the left) to structured data (right)

Since the goal is to record book names in a database, we have to go from the unstructured transcription (“Emma has read my little pony book for 12 minutes”) to structured data such as book: “my little pony” and duration: 12. That’s the job of n8n’s Information Extractor node, which then passes the structured output to the next node.

In code, you could use OpenAI’s JSON mode or tool calling. With tool (function) calling, you could even combine steps 3 and 4 into a single request that directly calls a tool with the book and duration. In practice, OpenAI only tells you “call the tool with these parameters”; you have to execute that call yourself, which ultimately inserts the row into the database table.
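A minimal sketch of the combined step with the `openai` SDK, assuming `client` is an `openai.OpenAI()` instance. The hypothetical `log_reading` tool carries the extracted fields as a JSON schema, so choosing the tool classifies the message and filling in its arguments extracts the data, all in one request. The model only returns the arguments as a JSON string; executing the insert is still up to your code. The tool name, field names and model are assumptions.

```python
import json
from typing import Optional

# Hypothetical tool: the model "calls" it with the fields it extracted.
LOG_READING_TOOL = {
    "type": "function",
    "function": {
        "name": "log_reading",
        "description": "Record that Emma read a book, and for how many minutes.",
        "parameters": {
            "type": "object",
            "properties": {
                "book": {"type": "string", "description": "Title of the book"},
                "duration_minutes": {"type": "integer", "description": "Reading time in minutes"},
            },
            "required": ["book", "duration_minutes"],
        },
    },
}


def parse_log_reading(calls: list) -> Optional[dict]:
    """Given (name, arguments_json) pairs, return log_reading's arguments, else None."""
    for name, arguments in calls:
        if name == "log_reading":
            return json.loads(arguments)
    return None


def extract_reading(text: str, client, model: str = "gpt-4o-mini") -> Optional[dict]:
    """Classify and extract in one request; `client` is assumed to be openai.OpenAI."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": text}],
        tools=[LOG_READING_TOOL],
        tool_choice="auto",
    )
    tool_calls = response.choices[0].message.tool_calls or []
    return parse_log_reading([(c.function.name, c.function.arguments) for c in tool_calls])
```

If `extract_reading` returns a dict, your code then performs the actual insert; if it returns `None`, the message was not a reading log entry.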

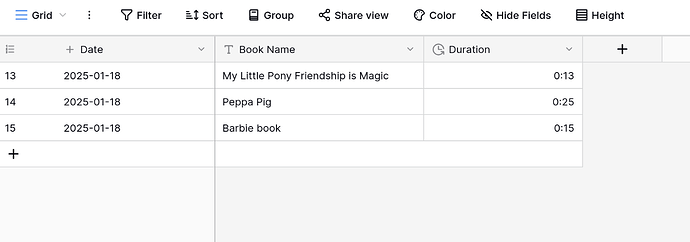

Step 5: Create database record

Records are added in Baserow for each book

Finally, the record is inserted into the database. My online database of choice is Baserow, a collaborative online database with a friendly UI and a very good API. It’s also open source, so you can self-host it.
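A minimal sketch of the insert using Baserow’s REST API and only Python’s standard library, assuming a database token and a table whose columns are named `Book` and `Minutes` (hypothetical names; use your own). The `user_field_names=true` query parameter lets the JSON body use column names instead of internal field IDs. For a self-hosted Baserow, change `base_url`.

```python
import json
import urllib.request

BASEROW_URL = "https://api.baserow.io"  # replace with your instance if self-hosted


def build_row_request(token: str, table_id: int, row: dict,
                      base_url: str = BASEROW_URL) -> urllib.request.Request:
    """Build Baserow's "create row" request for the given table."""
    # user_field_names=true lets the body use column names instead of field IDs.
    url = f"{base_url}/api/database/rows/table/{table_id}/?user_field_names=true"
    return urllib.request.Request(
        url,
        data=json.dumps(row).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )


def create_row(token: str, table_id: int, row: dict,
               base_url: str = BASEROW_URL) -> dict:
    """Insert the row and return Baserow's JSON description of it."""
    with urllib.request.urlopen(build_row_request(token, table_id, row, base_url)) as resp:
        return json.load(resp)
```

Usage, with a hypothetical table ID: `create_row(token, 12345, {"Book": "my little pony", "Minutes": 12})`.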

Speaking of self-hosting: n8n, the Telegram bot and Baserow can all be self-hosted. For a 100% self-hosted solution you would also need to host the AI models yourself, which probably means running Ollama on your PC with a fairly decent GPU, but it’s possible.
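A minimal sketch of moving the extraction step to a local model, assuming Ollama is running on its default port (11434) and a model such as `llama3.1` has been pulled (the model name and prompt are assumptions). It uses Ollama’s `/api/chat` endpoint with `format` set to `json`, so the reply can be parsed directly. Transcription would still need a separately hosted Whisper model, which this does not cover.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address

# Hypothetical prompt: ask for the same fields as the cloud version.
SYSTEM_PROMPT = (
    "Extract the book title and the reading time in minutes from the message. "
    'Reply only with JSON like {"book": "...", "duration_minutes": 0}.'
)


def build_chat_request(text: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a non-streaming /api/chat request that asks for JSON output."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": text},
        ],
        "format": "json",  # constrain the reply to valid JSON
        "stream": False,   # return a single response object instead of chunks
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_locally(text: str, model: str = "llama3.1") -> dict:
    """Send the request and parse the JSON the model wrote into message.content."""
    with urllib.request.urlopen(build_chat_request(text, model)) as resp:
        reply = json.load(resp)
    return json.loads(reply["message"]["content"])
```

Smaller local models follow extraction prompts less reliably than hosted ones, so validating the returned keys before inserting the row is worthwhile.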

The end! If you find this interesting and have use cases of your own, I’d love to discuss them. You could easily extend this to add items to your shopping list, for example, or to make notes for yourself.

Edit: after putting this together, I found a very similar template, “Angie, Personal AI Assistant with Telegram Voice and Text” (an n8n workflow template), which also has a YouTube video. It uses the AI Agent node (tool calling), which is a bit different but works similarly.