Hi @joffcom, I also suspect there is a problem with the backend. When I call the API with curl or Postman, the request always fails (the error returned is: Connection reset by peer).
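To tell a connection reset apart from an ordinary HTTP error such as a 502, a small probe like the one below may help. It is only a sketch using Python's standard library; the function names `classify_failure` and `probe` are made up for illustration and are not part of Baserow.

```python
import urllib.error
import urllib.request


def classify_failure(exc: BaseException) -> str:
    """Map an exception raised by an HTTP call to a coarse label."""
    # HTTPError is a subclass of URLError, so it has to be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return f"http_{exc.code}"
    # urllib wraps socket-level errors in URLError; unwrap the real cause.
    if isinstance(exc, urllib.error.URLError) and isinstance(exc.reason, BaseException):
        exc = exc.reason
    if isinstance(exc, ConnectionResetError):
        return "connection_reset"
    if isinstance(exc, ConnectionRefusedError):
        return "connection_refused"
    if isinstance(exc, TimeoutError):
        return "timeout"
    return "other"


def probe(url: str, timeout: float = 10.0) -> str:
    """Request `url` once and return either the HTTP status or a failure label."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return f"http_{resp.status}"
    except Exception as exc:  # deliberately broad: every failure gets a label
        return classify_failure(exc)
```

Calling `probe()` once against the nginx address and once directly against the upstream address shown in the nginx log (port 8000) should indicate whether the reset comes from the backend itself or only appears behind the proxy.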

I found two phenomena:

1、if I restart the backend with supervisor, the backend will run normally, access to all api is normal. But the response of the web-frontend will gradually slow down, perhaps because the speed of the back end processing the request is slow? About two days after normal operation, this error will occur again (502 error)

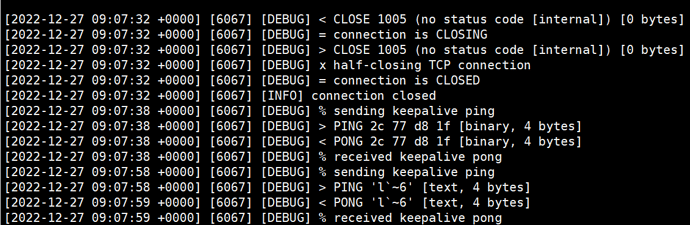

2、Every time a 502 error occurs in the API, the websocket connection will be closed, and the log will display an error code of 1005.
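For reference, close code 1005 is one of the reserved codes in RFC 6455: it is never sent on the wire and only means that the close frame that arrived carried no status code (the log further down shows `[0 bytes]`). The lookup below is a minimal sketch covering just a handful of codes; `describe_close_code` is a hypothetical helper, not something from the websockets library.

```python
# A subset of the close codes defined in RFC 6455, section 7.4.1.
CLOSE_CODES = {
    1000: "normal closure",
    1001: "going away (page navigation or server shutdown)",
    1005: "no status received (the close frame carried no code)",
    1006: "abnormal closure (connection dropped without a close frame)",
    1011: "internal error on the server",
}

# Reserved codes: an endpoint must not put these into a close frame,
# so they are always filled in by the side that reports them.
LOCAL_ONLY_CODES = {1005, 1006}


def describe_close_code(code: int) -> str:
    """Return a short human-readable description of a websocket close code."""
    label = CLOSE_CODES.get(code, "unlisted code")
    if code in LOCAL_ONLY_CODES:
        origin = "synthesized locally"
    else:
        origin = "carried in the close frame"
    return f"{code}: {label} [{origin}]"


print(describe_close_code(1005))
```

If that reading is right, 1005 here suggests that the other side (browser or proxy) closed the socket with an empty close frame, rather than the TCP connection being cut without any close frame, which would show up as 1006.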

Only the nginx and backend logs contain any useful information; I haven't found anything in the other logs.

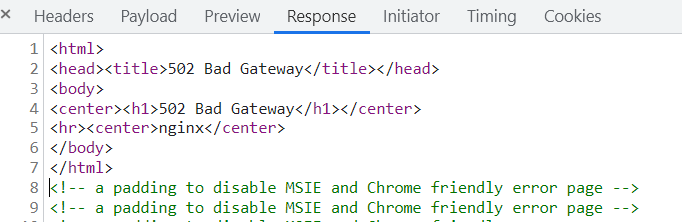

nginx log:

2022/12/27 10:53:01 [error] 24916#0: *1932046 recv() failed (104: Connection reset by peer) while reading response header from upstream, client: 192.111.14.226, server: localhost, request: "GET /api/settings/sysconfig/ HTTP/1.1", upstream: "http://192.111.14.241:8000/api/settings/sysconfig/", host: "baserow.com", referrer: "https://baserow.com/dashboard"

2022/12/27 10:53:01 [warn] 24916#0: *1932046 upstream server temporarily disabled while reading response header from upstream, client: 192.111.14.226, server: localhost, request: "GET /api/settings/sysconfig/ HTTP/1.1", upstream: "http://192.111.14.241:8000/api/settings/sysconfig/", host: "baserow.com", referrer: "https://baserow.com/dashboard"
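To see how often each upstream fails and with which message, error lines in this format can be tallied with a short script. This is a sketch that assumes the line layout shown in the two entries above; the regular expression and the name `summarize` are illustrative and may need adjusting for other nginx messages.

```python
import re
from collections import Counter

# Matches nginx error-log lines that mention a client and an upstream,
# in the layout of the two entries quoted above.
NGINX_ERROR_RE = re.compile(
    r'^(?P<time>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) '
    r'\[(?P<level>\w+)\] \d+#\d+: \*\d+ '
    r'(?P<message>.*?), client: (?P<client>[^,]+), '
    r'.*?upstream: "(?P<upstream>[^"]+)"'
)


def parse_line(line: str):
    """Return a dict of fields for a matching line, or None otherwise."""
    match = NGINX_ERROR_RE.match(line.strip())
    return match.groupdict() if match else None


def summarize(lines):
    """Count (level, message) pairs over all lines that can be parsed."""
    counts = Counter()
    for line in lines:
        fields = parse_line(line)
        if fields:
            counts[(fields["level"], fields["message"])] += 1
    return counts


sample = (
    '2022/12/27 10:53:01 [error] 24916#0: *1932046 recv() failed '
    '(104: Connection reset by peer) while reading response header from '
    'upstream, client: 192.111.14.226, server: localhost, request: '
    '"GET /api/settings/sysconfig/ HTTP/1.1", upstream: '
    '"http://192.111.14.241:8000/api/settings/sysconfig/", '
    'host: "baserow.com", referrer: "https://baserow.com/dashboard"'
)
print(parse_line(sample)["upstream"])
```

Feeding the whole error log through `summarize()` would show whether the resets cluster around particular times or endpoints.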

backend.error:

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] % sending keepalive ping

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] > PING 15 d4 cc 68 [binary, 4 bytes]

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] < PONG 15 d4 cc 68 [binary, 4 bytes]

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] % received keepalive pong

[2022-12-02 12:54:10 +0000] [11433] [DEBUG] < CLOSE 1005 (no status code [internal]) [0 bytes]

[2022-12-02 12:54:10 +0000] [11433] [DEBUG] = connection is CLOSING

[2022-12-02 12:54:10 +0000] [11433] [DEBUG] > CLOSE 1005 (no status code [internal]) [0 bytes]

[2022-12-02 12:54:10 +0000] [11433] [DEBUG] x half-closing TCP connection

[2022-12-02 12:54:10 +0000] [11433] [DEBUG] = connection is CLOSED

[2022-12-02 12:54:10 +0000] [11433] [INFO] connection closed

[2022-12-02 13:02:22 +0000] [11433] [DEBUG] = connection is CONNECTING

[2022-12-02 13:02:22 +0000] [11433] [DEBUG] < GET /ws/core/?jwtxxxxxxxxxx

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > Connection: Upgrade

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > Sec-WebSocket-Accept: NspQe/v9I0tP/KY0M=

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > Sec-WebSocket-Extensions: permessage-deflate

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > Date: Fri, 02 Dec 2022 12:53:43 GMT

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > Server: Python/3.8 websockets/10.3

[2022-12-02 12:53:43 +0000] [11433] [INFO] connection open

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] = connection is OPEN

[2022-12-02 12:53:43 +0000] [11433] [DEBUG] > TEXT '{"type": "authentication", "success": true, "we...e54-a31e-xxxxxx37"}' [100 bytes]

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] % sending keepalive ping

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] > PING 15 d4 cc 68 [binary, 4 bytes]

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] < PONG 15 d4 cc 68 [binary, 4 bytes]

[2022-12-02 12:54:03 +0000] [11433] [DEBUG] % received keepalive pong

I also ran into ‘Connection reset by peer’ errors in a Django application at my company when it was running with DEBUG = True. I think the connection is being closed before the request completes, or something like that.
