I was finally able to upgrade Baserow to 1.24.2 while leaving Postgres at 11, just by changing the version in docker-compose.yml instead of downloading it from the website.

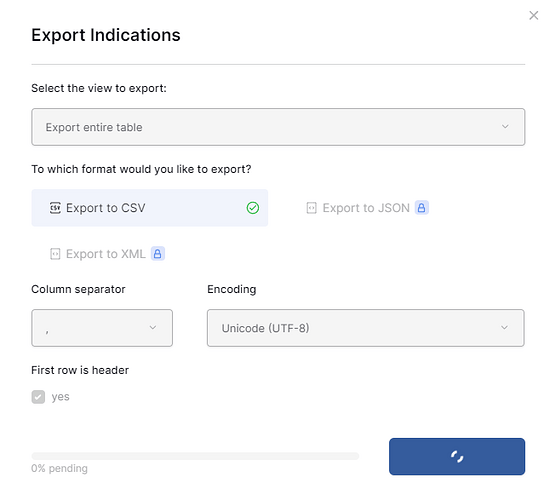

I exported the data:

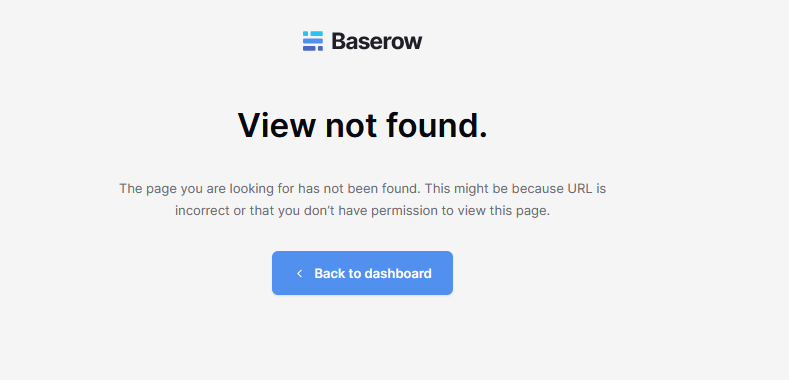

I restored it on a new, untouched virtual machine. The table names are there, but clicking on any of them gives an error.

Backup

docker compose run -v ~/baserow_backups:/baserow/backups backend backup -f /baserow/backups/baserow_backup.tar.gz

Restore

docker compose run -v ~/baserow_backups:/baserow/backups baserow backend-cmd-with-db restore -f /baserow/backups/baserow_backup.tar.gz
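Before running the restore on the new machine, it may be worth confirming that the archive actually arrived intact. This is only a minimal sketch: `summarize_backup` is a hypothetical helper name, and the only assumption is that the backup is a gzip-compressed tar, as the `.tar.gz` file name suggests. It does not assume anything about what Baserow puts inside the archive.

```python
import tarfile
from pathlib import Path


def summarize_backup(path):
    """Return (entry_count, total_uncompressed_bytes) for a .tar.gz archive.

    Raises FileNotFoundError if the file is missing, and tarfile.ReadError
    if the file is not a gzip-compressed tar.
    """
    archive = Path(path).expanduser()
    if not archive.is_file():
        raise FileNotFoundError(f"backup archive not found: {archive}")

    count = 0
    total = 0
    # Mode "r:gz" makes tarfile refuse anything that is not gzip-compressed.
    with tarfile.open(archive, mode="r:gz") as tar:
        for member in tar:
            count += 1
            total += member.size
    return count, total
```

Calling `summarize_backup("~/baserow_backups/baserow_backup.tar.gz")` on both machines and comparing the two results is a quick way to rule out a truncated copy before blaming the restore itself.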

Docs

I followed the guide below, but it needs more examples of exactly how to restore.

The relevant part is all the way at the bottom of the page, and there was not enough information there.

https://baserow.io/docs/installation%2Finstall-with-docker-compose

Logs

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:24:45.908 UTC [437] baserow@baserow STATEMENT: SELECT “database_table_468”.“id”, “database_table_468”.“created_on”, “database_table_468”.“updated_on”, “database_table_468”.“trashed”, “database_table_468”.“field_4235”, “database_table_468”.“field_4236”, “database_table_468”.“field_4238”, “database_table_468”.“order”, “database_table_468”.“needs_background_update”, “database_table_468”.“created_by_id”, “database_table_468”.“last_modified_by_id” FROM “database_table_468” WHERE NOT “database_table_468”.“trashed” ORDER BY “database_table_468”.“field_4235” COLLATE “en-x-icu” ASC NULLS FIRST, “database_table_468”.“order” ASC NULLS FIRST, “database_table_468”.“id” ASC NULLS FIRST LIMIT 21

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.801 UTC [561] baserow@baserow ERROR: relation “database_table_557” does not exist at character 35

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.801 UTC [561] baserow@baserow STATEMENT: SELECT COUNT(*) AS “__count” FROM “database_table_557” WHERE NOT “database_table_557”.“trashed”

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.837 UTC [563] baserow@baserow ERROR: relation “database_table_557” does not exist at character 406

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.837 UTC [563] baserow@baserow STATEMENT: SELECT “database_table_557”.“id”, “database_table_557”.“created_on”, “database_table_557”.“updated_on”, “database_table_557”.“trashed”, “database_table_557”.“field_5091”, “database_table_557”.“field_5092”, “database_table_557”.“field_5097”, “database_table_557”.“order”, “database_table_557”.“needs_background_update”, “database_table_557”.“created_by_id”, “database_table_557”.“last_modified_by_id” FROM “database_table_557” WHERE NOT “database_table_557”.“trashed” ORDER BY “database_table_557”.“order” ASC NULLS FIRST, “database_table_557”.“id” ASC NULLS FIRST LIMIT 21

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.838 UTC [563] baserow@baserow ERROR: relation “database_table_557” does not exist at character 406

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.838 UTC [563] baserow@baserow STATEMENT: SELECT “database_table_557”.“id”, “database_table_557”.“created_on”, “database_table_557”.“updated_on”, “database_table_557”.“trashed”, “database_table_557”.“field_5091”, “database_table_557”.“field_5092”, “database_table_557”.“field_5097”, “database_table_557”.“order”, “database_table_557”.“needs_background_update”, “database_table_557”.“created_by_id”, “database_table_557”.“last_modified_by_id” FROM “database_table_557” WHERE NOT “database_table_557”.“trashed” ORDER BY “database_table_557”.“order” ASC NULLS FIRST, “database_table_557”.“id” ASC NULLS FIRST LIMIT 21

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.838 UTC [563] baserow@baserow ERROR: relation “database_table_557” does not exist at character 406

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.838 UTC [563] baserow@baserow STATEMENT: SELECT “database_table_557”.“id”, “database_table_557”.“created_on”, “database_table_557”.“updated_on”, “database_table_557”.“trashed”, “database_table_557”.“field_5091”, “database_table_557”.“field_5092”, “database_table_557”.“field_5097”, “database_table_557”.“order”, “database_table_557”.“needs_background_update”, “database_table_557”.“created_by_id”, “database_table_557”.“last_modified_by_id” FROM “database_table_557” WHERE NOT “database_table_557”.“trashed” ORDER BY “database_table_557”.“order” ASC NULLS FIRST, “database_table_557”.“id” ASC NULLS FIRST LIMIT 21

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.839 UTC [563] baserow@baserow ERROR: relation “database_table_557” does not exist at character 406

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.839 UTC [563] baserow@baserow STATEMENT: SELECT “database_table_557”.“id”, “database_table_557”.“created_on”, “database_table_557”.“updated_on”, “database_table_557”.“trashed”, “database_table_557”.“field_5091”, “database_table_557”.“field_5092”, “database_table_557”.“field_5097”, “database_table_557”.“order”, “database_table_557”.“needs_background_update”, “database_table_557”.“created_by_id”, “database_table_557”.“last_modified_by_id” FROM “database_table_557” WHERE NOT “database_table_557”.“trashed” ORDER BY “database_table_557”.“order” ASC NULLS FIRST, “database_table_557”.“id” ASC NULLS FIRST LIMIT 21

[POSTGRES][2024-04-20 23:26:31] 2024-04-20 23:26:31.840 UTC [563] baserow@baserow ERROR: relation “database_table_557” does not exist at character 406

[BACKEND][2024-04-20 23:26:31] 10.21.0.195:0 - “GET /api/database/views/table/557/?include=filters,sortings,group_bys,decorations HTTP/1.1” 200

[BACKEND][2024-04-20 23:26:31] ERROR 2024-04-20 23:26:31,808 django.request.log_response:241- Internal Server Error: /api/database/views/grid/2273/

[BACKEND][2024-04-20 23:26:31] Traceback (most recent call last):

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/backends/utils.py”, line 89, in _execute

[BACKEND][2024-04-20 23:26:31] return self.cursor.execute(sql, params)

[BACKEND][2024-04-20 23:26:31] psycopg2.errors.UndefinedTable: relation “database_table_557” does not exist

[BACKEND][2024-04-20 23:26:31] LINE 1: SELECT COUNT(*) AS “__count” FROM “database_table_557” WHERE…

[BACKEND][2024-04-20 23:26:31] ^

[BACKEND][2024-04-20 23:26:31]

[BACKEND][2024-04-20 23:26:31]

[BACKEND][2024-04-20 23:26:31] The above exception was the direct cause of the following exception:

[BACKEND][2024-04-20 23:26:31]

[BACKEND][2024-04-20 23:26:31] Traceback (most recent call last):

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/asgiref/sync.py”, line 486, in thread_handler

[BACKEND][2024-04-20 23:26:31] raise exc_info[1]

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/core/handlers/exception.py”, line 43, in inner

[BACKEND][2024-04-20 23:26:31] response = await get_response(request)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/core/handlers/base.py”, line 253, in _get_response_async

[BACKEND][2024-04-20 23:26:31] response = await wrapped_callback(

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/asgiref/sync.py”, line 448, in __call__

[BACKEND][2024-04-20 23:26:31] ret = await asyncio.wait_for(future, timeout=None)

[BACKEND][2024-04-20 23:26:31] File “/usr/lib/python3.9/asyncio/tasks.py”, line 442, in wait_for

[BACKEND][2024-04-20 23:26:31] return await fut

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/asgiref/current_thread_executor.py”, line 22, in run

[BACKEND][2024-04-20 23:26:31] result = self.fn(*self.args, **self.kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/asgiref/sync.py”, line 490, in thread_handler

[BACKEND][2024-04-20 23:26:31] return func(*args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/views/decorators/csrf.py”, line 55, in wrapped_view

[BACKEND][2024-04-20 23:26:31] return view_func(*args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/views/generic/base.py”, line 103, in view

[BACKEND][2024-04-20 23:26:31] return self.dispatch(request, *args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/views.py”, line 509, in dispatch

[BACKEND][2024-04-20 23:26:31] response = self.handle_exception(exc)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/views.py”, line 469, in handle_exception

[BACKEND][2024-04-20 23:26:31] self.raise_uncaught_exception(exc)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/views.py”, line 480, in raise_uncaught_exception

[BACKEND][2024-04-20 23:26:31] raise exc

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/views.py”, line 506, in dispatch

[BACKEND][2024-04-20 23:26:31] response = handler(request, *args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/backend/src/baserow/api/decorators.py”, line 105, in func_wrapper

[BACKEND][2024-04-20 23:26:31] return func(*args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/backend/src/baserow/api/decorators.py”, line 340, in func_wrapper

[BACKEND][2024-04-20 23:26:31] return func(*args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/backend/src/baserow/api/decorators.py”, line 170, in func_wrapper

[BACKEND][2024-04-20 23:26:31] return func(*args, **kwargs)

[BACKEND][2024-04-20 23:26:31] File “/baserow/backend/src/baserow/contrib/database/api/views/grid/views.py”, line 379, in get

[BACKEND][2024-04-20 23:26:31] page = paginator.paginate_queryset(queryset, request, self)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/pagination.py”, line 387, in paginate_queryset

[BACKEND][2024-04-20 23:26:31] self.count = self.get_count(queryset)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/rest_framework/pagination.py”, line 525, in get_count

[BACKEND][2024-04-20 23:26:31] return queryset.count()

[BACKEND][2024-04-20 23:26:31] File “/baserow/backend/src/baserow/contrib/database/table/models.py”, line 161, in count

[BACKEND][2024-04-20 23:26:31] return super().count()

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/models/query.py”, line 621, in count

[BACKEND][2024-04-20 23:26:31] return self.query.get_count(using=self.db)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/models/sql/query.py”, line 559, in get_count

[BACKEND][2024-04-20 23:26:31] return obj.get_aggregation(using, [“__count”])[“__count”]

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/models/sql/query.py”, line 544, in get_aggregation

[BACKEND][2024-04-20 23:26:31] result = compiler.execute_sql(SINGLE)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/models/sql/compiler.py”, line 1398, in execute_sql

[BACKEND][2024-04-20 23:26:31] cursor.execute(sql, params)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/backends/utils.py”, line 67, in execute

[BACKEND][2024-04-20 23:26:31] return self._execute_with_wrappers(

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/backends/utils.py”, line 80, in _execute_with_wrappers

[BACKEND][2024-04-20 23:26:31] return executor(sql, params, many, context)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/backends/utils.py”, line 89, in _execute

[BACKEND][2024-04-20 23:26:31] return self.cursor.execute(sql, params)

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/utils.py”, line 91, in __exit__

[BACKEND][2024-04-20 23:26:31] raise dj_exc_value.with_traceback(traceback) from exc_value

[BACKEND][2024-04-20 23:26:31] File “/baserow/venv/lib/python3.9/site-packages/django/db/backends/utils.py”, line 89, in _execute

[BACKEND][2024-04-20 23:26:31] return self.cursor.execute(sql, params)

[BACKEND][2024-04-20 23:26:31] django.db.utils.ProgrammingError: relation “database_table_557” does not exist

[BACKEND][2024-04-20 23:26:31] LINE 1: SELECT COUNT(*) AS “__count” FROM “database_table_557” WHERE…

[BACKEND][2024-04-20 23:26:31] ^

[BACKEND][2024-04-20 23:26:31]

[BACKEND][2024-04-20 23:26:31] 10.21.0.195:0 - “GET /api/database/views/grid/2273/?limit=80&offset=0&include=field_options%2Crow_metadata HTTP/1.1” 500

[BACKEND][2024-04-20 23:26:39] [2024-04-20 23:26:31 +0000] [399] [INFO] connection closed
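Since the same error repeats many times, here is a rough sketch for boiling logs like these down to the list of relations Postgres says are missing. `missing_relations` is a hypothetical helper, not part of Baserow. The pattern accepts both straight and curly quotes, because the paste above ended up with curly ones.

```python
import re
from collections import Counter

# Accepts straight quotes (raw logs) and curly quotes (forum-formatted paste).
MISSING_RELATION = re.compile(r'relation ["“]([^"”]+)["”] does not exist')


def missing_relations(log_text):
    """Count how many log lines report each relation as missing."""
    return Counter(MISSING_RELATION.findall(log_text))
```

Run over the whole captured log, this shows at a glance whether only one table is missing after the restore or whether every user table is affected, which would point at the restore step rather than at a single table.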