How have you self-hosted Baserow?

Docker Desktop (Windows 10) running Baserow 1.25.1 with a standalone PostgreSQL

What are the specs of the service or server you are using to host Baserow?

24GB RAM for Docker, Intel i7 (6 cores)

Which version of Baserow are you using?

[baserow/baserow:1.25.1]

How have you configured your self-hosted installation?

Stock install with a standalone PostgreSQL 15 instance on the same machine

What commands if any did you use to start your Baserow server?

docker run -d --name baserow -e BASEROW_PUBLIC_URL=http://localhost:3001 -e DATABASE_HOST=host.docker.internal -e DATABASE_NAME=baserowDB -e DATABASE_USER=postgres -e DATABASE_PASSWORD=xxxx -e DATABASE_PORT=5432 -v baserow_data:/baserow/data -p 3001:80 --restart unless-stopped baserow/baserow:1.25.1

Describe the problem

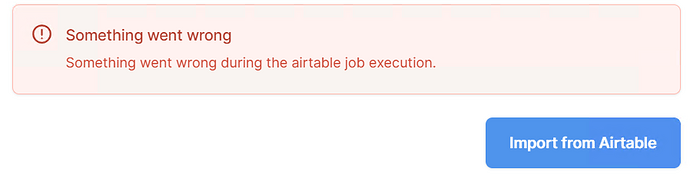

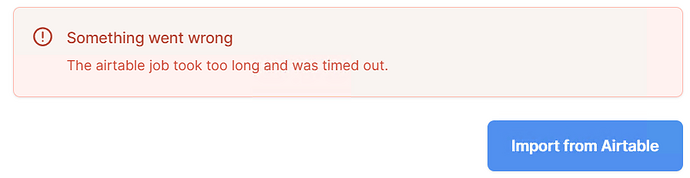

The Airtable import fails when the total size of the attachments is too large.

The job ends at the `raise LargeZipFile(requires_zip64 +` line in `zipfile.py` with this error: `zipfile.LargeZipFile: Central directory offset would require ZIP64 extensions`

Describe, step by step, how to reproduce the error or problem you are encountering.

- Create new database / import from AirTable (beta)

- Enter the URL

- Click “Import from Airtable”

QUESTION#1: Is there an easy workaround for this? Can I add the ZIP64 Python library to the Docker setup?
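Some context on QUESTION#1, as far as I can tell: ZIP64 is not a separate library. It is part of Python's standard `zipfile` module and is controlled by the `allowZip64` argument. The traceback shows `ZipFile(files_buffer, "a", ZIP_DEFLATED, False)`, and the fourth positional argument there is `allowZip64`. The sketch below is not Baserow code. `can_write_entries` is a hypothetical helper, and it uses the 65,535-entry limit as a cheap stand-in for the 2 GiB size limits, since both are enforced by the same check in `zipfile`.

```python
import io
from zipfile import ZIP_DEFLATED, LargeZipFile, ZipFile

# Classic (non-ZIP64) archives can hold at most 65,535 entries.
CLASSIC_ZIP_MAX_ENTRIES = 65_535


def can_write_entries(entry_count: int, allow_zip64: bool) -> bool:
    """Return True if an in-memory archive with `entry_count` empty files can be written.

    Hypothetical helper for illustration only; it is not part of Baserow.
    """
    buffer = io.BytesIO()
    try:
        # Same positional shape as the call in the traceback:
        # ZipFile(file, mode, compression, allowZip64)
        with ZipFile(buffer, "a", ZIP_DEFLATED, allow_zip64) as archive:
            for index in range(entry_count):
                archive.writestr(f"file_{index}.txt", b"")
    except LargeZipFile:
        # Raised when a classic-ZIP limit is exceeded and ZIP64 is disabled.
        return False
    return True
```

Calling `can_write_entries(CLASSIC_ZIP_MAX_ENTRIES + 1, allow_zip64=False)` should hit `LargeZipFile`, while the same call with `allow_zip64=True` should succeed. If that reading is right, the workaround would be a change to that one argument in `handler.py` rather than an extra package in the Docker image, but I have not tested that against Baserow itself.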

QUESTION#2: What about importing in pieces then reassembling?
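To make "in pieces" concrete, here is a rough sketch of the grouping I have in mind: split the attachments into batches that each stay under the classic ZIP limits. Everything in it is an assumption for illustration. `split_into_batches`, the `(name, size)` tuples, and the 1 GiB default budget (chosen to leave headroom below the 2 GiB limit for ZIP headers) are hypothetical, and whether the importer could accept batches like this is exactly what I am asking.

```python
from typing import Iterable, Iterator

# Assumed budgets: well below the classic ZIP limits (2 GiB offsets, 65,535 entries).
DEFAULT_MAX_BATCH_BYTES = 1 * 1024**3
DEFAULT_MAX_BATCH_FILES = 60_000

Attachment = tuple[str, int]  # (file name, size in bytes); hypothetical shape


def split_into_batches(
    attachments: Iterable[Attachment],
    max_bytes: int = DEFAULT_MAX_BATCH_BYTES,
    max_files: int = DEFAULT_MAX_BATCH_FILES,
) -> Iterator[list[Attachment]]:
    """Greedily group attachments so each batch respects both budgets."""
    batch: list[Attachment] = []
    batch_bytes = 0
    for name, size in attachments:
        if size > max_bytes:
            # A single file over the budget cannot fit in any batch.
            raise ValueError(f"{name} ({size} bytes) exceeds the batch budget")
        # Start a new batch when adding this file would break either budget.
        if batch and (batch_bytes + size > max_bytes or len(batch) >= max_files):
            yield batch
            batch, batch_bytes = [], 0
        batch.append((name, size))
        batch_bytes += size
    if batch:
        yield batch
```

For example, `list(split_into_batches([("a", 4), ("b", 4), ("c", 4)], max_bytes=8))` groups the first two files together and puts the third in its own batch. Reassembling the rows and their file references afterwards is the part I am unsure about.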

QUESTION#3: What if I write a new importer in JavaScript that uses the API to add the rows and files?

I could even make it a sync/merge tool, so that Baserow becomes a viable backup that Airtable customers can run in parallel before dropping Airtable altogether.

SUGGESTIONS?

Provide screenshots or include share links showing:

How many rows in total do you have in your Baserow tables?

100k rows w/ 100GB of attachments

Please attach full logs from all of Baserow’s services

2024-06-04 12:16:29 [EXPORT_WORKER][2024-06-04 18:16:29] Arguments: {‘hostname’: ‘export-worker@5229a2bd2ad6’, ‘id’: ‘7b56fe5c-59ee-4fd5-9bf2-5433d5e9a4f1’, ‘name’: ‘baserow.core.jobs.tasks.run_async_job’, ‘exc’: “LargeZipFile(‘Central directory offset would require ZIP64 extensions’)”, ‘traceback’: ‘Traceback (most recent call last):\n File “/baserow/backend/src/baserow/contrib/database/airtable/handler.py”, line 331, in download_files_as_zip\n files_zip.writestr(file_name, response.content)\n File “/usr/lib/python3.11/zipfile.py”, line 1830, in writestr\n with self.open(zinfo, mode='w') as dest:\n ^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/usr/lib/python3.11/zipfile.py”, line 1547, in open\n return self._open_to_write(zinfo, force_zip64=force_zip64)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/usr/lib/python3.11/zipfile.py”, line 1641, in _open_to_write\n self._writecheck(zinfo)\n File “/usr/lib/python3.11/zipfile.py”, line 1756, in _writecheck\n raise LargeZipFile(requires_zip64 +\nzipfile.LargeZipFile: Zipfile size would require ZIP64 extensions\n\nDuring handling of the above exception, another exception occurred:\n\nTraceback (most recent call last):\n File “/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py”, line 451, in trace_task\n R = retval = fun(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^\n File “/baserow/venv/lib/python3.11/site-packages/celery/app/trace.py”, line 734, in protected_call\n return self.run(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/baserow/backend/src/baserow/core/jobs/tasks.py”, line 34, in run_async_job\n JobHandler().run(job)\n File “/baserow/backend/src/baserow/core/jobs/handler.py”, line 59, in run\n return job_type.run(job, progress)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/baserow/backend/src/baserow/contrib/database/airtable/job_types.py”, line 118, in run\n ).do(\n ^^^\n File “/baserow/backend/src/baserow/contrib/database/airtable/actions.py”, line 59, in do\n database = AirtableHandler.import_from_airtable_to_workspace(\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/baserow/backend/src/baserow/contrib/database/airtable/handler.py”, line 621, in import_from_airtable_to_workspace\n baserow_database_export, files_buffer = cls.to_baserow_database_export(\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/baserow/backend/src/baserow/contrib/database/airtable/handler.py”, line 538, in to_baserow_database_export\n user_files_zip = cls.download_files_as_zip(\n ^^^^^^^^^^^^^^^^^^^^^^^^^^\n File “/baserow/backend/src/baserow/contrib/database/airtable/handler.py”, line 328, in download_files_as_zip\n with ZipFile(files_buffer, “a”, ZIP_DEFLATED, False) as files_zip:\n File “/usr/lib/python3.11/zipfile.py”, line 1342, in __exit__\n self.close()\n File “/usr/lib/python3.11/zipfile.py”, line 1888, in close\n self._write_end_record()\n File “/usr/lib/python3.11/zipfile.py”, line 1963, in _write_end_record\n raise LargeZipFile(requires_zip64 +\nzipfile.LargeZipFile: Central directory offset would require ZIP64 extensions\n’, ‘args’: ‘[10]’, ‘kwargs’: ‘{}’, ‘description’: ‘raised unexpected’, ‘internal’: False}