Hi @nigel, thanks for reaching out to me.

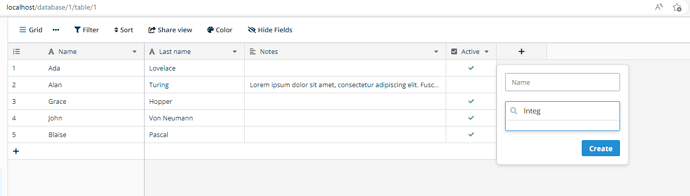

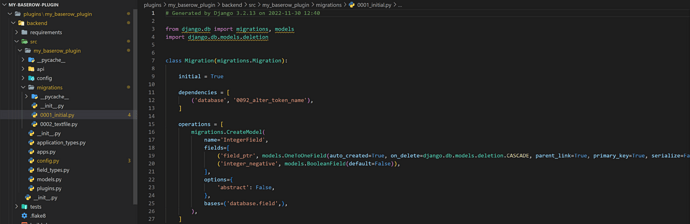

First of all, yes, I ran the migration script, and I can see the new files under the backend’s migrations folder:

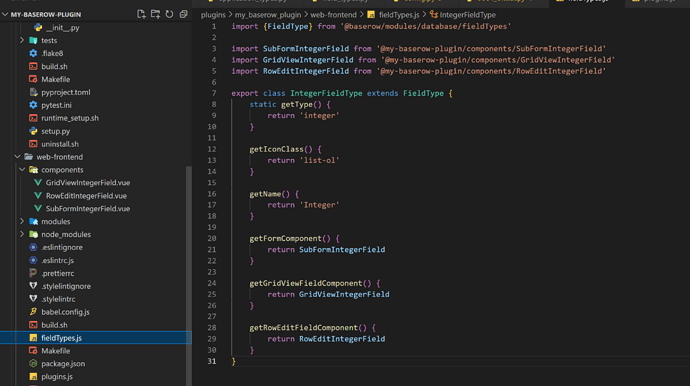

Second, yes, I finished the frontend part as well. I added the components and the other parts, see here:

Last but not least, after I ran the migrate command that you provided, the whole Docker image became corrupt due to a PostgreSQL database issue. Here are the logs:

```
=========================================================================================
██████╗  █████╗ ███████╗███████╗██████╗  ██████╗ ██╗    ██╗
██╔══██╗██╔══██╗██╔════╝██╔════╝██╔══██╗██╔═══██╗██║    ██║
██████╔╝███████║███████╗█████╗  ██████╔╝██║   ██║██║ █╗ ██║
██╔══██╗██╔══██║╚════██║██╔══╝  ██╔══██╗██║   ██║██║███╗██║
██████╔╝██║  ██║███████║███████╗██║  ██║╚██████╔╝╚███╔███╔╝
╚═════╝ ╚═╝  ╚═╝╚══════╝╚══════╝╚═╝  ╚═╝ ╚═════╝  ╚══╝╚══╝
Version 1.13.1
=========================================================================================
Welcome to Baserow. See https://baserow.io/installation/install-with-docker/ for detailed instructions on
how to use this Docker image.
[STARTUP][2022-12-01 18:56:26] Running setup of embedded baserow database.
[POSTGRES_INIT][2022-12-01 18:56:26] Becoming postgres superuser to run setup SQL commands:
[POSTGRES_INIT][2022-12-01 18:56:26]
[POSTGRES_INIT][2022-12-01 18:56:26] PostgreSQL Database directory appears to contain a database; Skipping initialization
[POSTGRES_INIT][2022-12-01 18:56:26]
[STARTUP][2022-12-01 18:56:26] No BASEROW_PUBLIC_URL environment variable provided. Starting baserow locally at http://localhost without automatic https.
[PLUGIN][SETUP] Found a plugin in /baserow/data/plugins/my_baserow_plugin/, ensuring it is installed...
[PLUGIN][my_baserow_plugin] Found a backend app for my_baserow_plugin.
[PLUGIN][my_baserow_plugin] Skipping install of my_baserow_plugin's backend app as it is already installed.
[PLUGIN][my_baserow_plugin] Skipping runtime setup of my_baserow_plugin's backend app.
[PLUGIN][my_baserow_plugin] Found a web-frontend module for my_baserow_plugin.
[PLUGIN][my_baserow_plugin] Skipping build of my_baserow_plugin web-frontend module as it has already been built.
[PLUGIN][my_baserow_plugin] Skipping runtime setup of my_baserow_plugin's web-frontend module.
[PLUGIN][my_baserow_plugin] Fixing ownership of plugins from 0 to baserow_docker_user in /baserow/data/plugins
[PLUGIN][my_baserow_plugin] Finished setting up my_baserow_plugin successfully.
[STARTUP][2022-12-01 18:56:27] Starting all Baserow processes:
2022-12-01 18:56:27,391 CRIT Supervisor is running as root. Privileges were not dropped because no user is specified in the config file. If you intend to run as root, you can set user=root in the config file to avoid this message.
2022-12-01 18:56:27,391 INFO Included extra file "/baserow/supervisor/includes/enabled/embedded-postgres.conf" during parsing
2022-12-01 18:56:27,391 INFO Included extra file "/baserow/supervisor/includes/enabled/embedded-redis.conf" during parsing
2022-12-01 18:56:27,393 INFO supervisord started with pid 1
2022-12-01 18:56:28,395 INFO spawned: 'processes' with pid 187
2022-12-01 18:56:28,396 INFO spawned: 'baserow-watcher' with pid 188
2022-12-01 18:56:28,397 INFO spawned: 'caddy' with pid 189
2022-12-01 18:56:28,398 INFO spawned: 'postgresql' with pid 190
2022-12-01 18:56:28,400 INFO spawned: 'redis' with pid 191
2022-12-01 18:56:28,401 INFO spawned: 'backend' with pid 193
2022-12-01 18:56:28,403 INFO spawned: 'celeryworker' with pid 198
2022-12-01 18:56:28,409 INFO spawned: 'exportworker' with pid 225
2022-12-01 18:56:28,411 INFO spawned: 'webfrontend' with pid 234
2022-12-01 18:56:28,412 INFO spawned: 'beatworker' with pid 237
2022-12-01 18:56:28,413 INFO reaped unknown pid 185 (exit status 0)
[REDIS][2022-12-01 18:56:28] 191:C 01 Dec 2022 18:56:28.453 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
[REDIS][2022-12-01 18:56:28] 191:C 01 Dec 2022 18:56:28.453 # Redis version=6.0.16, bits=64, commit=00000000, modified=0, pid=191, just started
[REDIS][2022-12-01 18:56:28] 191:C 01 Dec 2022 18:56:28.453 # Configuration loaded
[REDIS][2022-12-01 18:56:28] 191:M 01 Dec 2022 18:56:28.458 * Running mode=standalone, port=6379.
[REDIS][2022-12-01 18:56:28] 191:M 01 Dec 2022 18:56:28.458 # Server initialized
[REDIS][2022-12-01 18:56:28] 191:M 01 Dec 2022 18:56:28.458 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.
[REDIS][2022-12-01 18:56:28] 191:M 01 Dec 2022 18:56:28.458 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo madvise > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled (set to 'madvise' or 'never').
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.502 UTC [190] LOG: listening on IPv4 address "127.0.0.1", port 5432
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.502 UTC [190] LOG: could not bind IPv6 address "::1": Cannot assign requested address
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.502 UTC [190] HINT: Is another postmaster already running on port 5432? If not, wait a few seconds and retry.
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.511 UTC [190] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.532 UTC [402] LOG: database system was shut down at 2022-11-30 14:50:50 UTC
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.533 UTC [402] LOG: invalid resource manager ID 105 at 0/3180AC0
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.533 UTC [402] LOG: invalid primary checkpoint record
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.533 UTC [402] PANIC: could not locate a valid checkpoint record
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.541 UTC [190] LOG: startup process (PID 402) was terminated by signal 6: Aborted
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.541 UTC [190] LOG: aborting startup due to startup process failure
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.542 UTC [190] LOG: database system is shut down
2022-12-01 18:56:28,549 INFO exited: postgresql (exit status 1; not expected)
[BACKEND][2022-12-01 18:56:28] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:28] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:28] connection to server at "localhost" (127.0.0.1), port 5432 failed: FATAL: the database system is starting up
[BACKEND][2022-12-01 18:56:28]
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5674763,"msg":"using provided configuration","config_file":"/baserow/caddy/Caddyfile","config_adapter":""}
2022-12-01 18:56:28,570 INFO reaped unknown pid 278 (exit status 141)
2022-12-01 18:56:28,570 INFO reaped unknown pid 403 (exit status 1)
[CADDY][2022-12-01 18:56:28] {"level":"warn","ts":1669920988.570174,"msg":"input is not formatted with 'caddy fmt'","adapter":"caddyfile","file":"/baserow/caddy/Caddyfile","line":2}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.571802,"logger":"admin","msg":"admin endpoint started","address":"tcp/localhost:2019","enforce_origin":false,"origins":["[::1]:2019","127.0.0.1:2019","localhost:2019"]}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5721884,"logger":"http","msg":"server is listening only on the HTTP port, so no automatic HTTPS will be applied to this server","server_name":"srv0","http_port":80}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5728242,"logger":"tls.cache.maintenance","msg":"started background certificate maintenance","cache":"0xc0000d89a0"}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5737472,"logger":"tls","msg":"cleaning storage unit","description":"FileStorage:/baserow/data/caddy/data/caddy"}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5738244,"logger":"tls","msg":"finished cleaning storage units"}
[CADDY][2022-12-01 18:56:28] {"level":"info","ts":1669920988.5742636,"msg":"autosaved config (load with --resume flag)","file":"/baserow/data/caddy/config/caddy/autosave.json"}
[WEBFRONTEND][2022-12-01 18:56:28] yarn run v1.22.19
[WEBFRONTEND][2022-12-01 18:56:29] $ nuxt --hostname 0.0.0.0
2022-12-01 18:56:29,560 INFO success: processes entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)
2022-12-01 18:56:29,561 INFO success: baserow-watcher entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)
2022-12-01 18:56:29,561 INFO spawned: 'postgresql' with pid 427
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.593 UTC [427] LOG: listening on IPv4 address "127.0.0.1", port 5432
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.593 UTC [427] LOG: could not bind IPv6 address "::1": Cannot assign requested address
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.593 UTC [427] HINT: Is another postmaster already running on port 5432? If not, wait a few seconds and retry.
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.602 UTC [427] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.620 UTC [438] LOG: database system was shut down at 2022-11-30 14:50:50 UTC
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.620 UTC [438] LOG: invalid resource manager ID 105 at 0/3180AC0
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.620 UTC [438] LOG: invalid primary checkpoint record
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.620 UTC [438] PANIC: could not locate a valid checkpoint record
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.627 UTC [427] LOG: startup process (PID 438) was terminated by signal 6: Aborted
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.627 UTC [427] LOG: aborting startup due to startup process failure
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.628 UTC [427] LOG: database system is shut down
2022-12-01 18:56:29,629 INFO exited: postgresql (exit status 1; not expected)
2022-12-01 18:56:29,630 INFO reaped unknown pid 436 (exit status 0)
[WEBFRONTEND][2022-12-01 18:56:30] Loading extra plugin modules: /baserow/data/plugins/my_baserow_plugin/web-frontend/modules/my-baserow-plugin/module.js
[BACKEND][2022-12-01 18:56:30] Waiting for PostgreSQL to become available attempt 0/5 ...
[BACKEND][2022-12-01 18:56:30] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:30] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:30] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused
[BACKEND][2022-12-01 18:56:30] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:30] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address
[BACKEND][2022-12-01 18:56:30] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:30]
[WEBFRONTEND][2022-12-01 18:56:31] ℹ Listening on: http://172.22.0.2:3000/
[WEBFRONTEND][2022-12-01 18:56:31] ℹ Preparing project for development
[WEBFRONTEND][2022-12-01 18:56:31] ℹ Initial build may take a while
[WEBFRONTEND][2022-12-01 18:56:31]
[WEBFRONTEND][2022-12-01 18:56:31] WARN No pages directory found in /baserow/web-frontend. Using the default built-in page.
[WEBFRONTEND][2022-12-01 18:56:31]
[WEBFRONTEND][2022-12-01 18:56:31] ✔ Builder initialized
2022-12-01 18:56:32,338 INFO spawned: 'postgresql' with pid 445
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.379 UTC [445] LOG: listening on IPv4 address "127.0.0.1", port 5432
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.379 UTC [445] LOG: could not bind IPv6 address "::1": Cannot assign requested address
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.379 UTC [445] HINT: Is another postmaster already running on port 5432? If not, wait a few seconds and retry.
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.388 UTC [445] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.408 UTC [456] LOG: database system was shut down at 2022-11-30 14:50:50 UTC
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.408 UTC [456] LOG: invalid resource manager ID 105 at 0/3180AC0
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.408 UTC [456] LOG: invalid primary checkpoint record
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.408 UTC [456] PANIC: could not locate a valid checkpoint record
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.415 UTC [445] LOG: startup process (PID 456) was terminated by signal 6: Aborted
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.415 UTC [445] LOG: aborting startup due to startup process failure
[POSTGRES][2022-12-01 18:56:32] 2022-12-01 18:56:32.416 UTC [445] LOG: database system is shut down
2022-12-01 18:56:32,417 INFO exited: postgresql (exit status 1; not expected)
2022-12-01 18:56:32,417 INFO reaped unknown pid 454 (exit status 0)
[WEBFRONTEND][2022-12-01 18:56:32] ✔ Nuxt files generated
[WEBFRONTEND][2022-12-01 18:56:32] ℹ Compiling Client
[BACKEND][2022-12-01 18:56:32] Waiting for PostgreSQL to become available attempt 1/5 ...
[BACKEND][2022-12-01 18:56:32] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:32] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:32] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused
[BACKEND][2022-12-01 18:56:32] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:32] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address
[BACKEND][2022-12-01 18:56:32] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:32]
[WEBFRONTEND][2022-12-01 18:56:32] ℹ Compiling Server
[WEBFRONTEND][2022-12-01 18:56:32]
[WEBFRONTEND][2022-12-01 18:56:32] WARN Browserslist: caniuse-lite is outdated. Please run:
[WEBFRONTEND][2022-12-01 18:56:32] npx browserslist@latest --update-db
[WEBFRONTEND][2022-12-01 18:56:32]
[WEBFRONTEND][2022-12-01 18:56:32] Why you should do it regularly:
[WEBFRONTEND][2022-12-01 18:56:32] https://github.com/browserslist/browserslist#browsers-data-updating
[BACKEND][2022-12-01 18:56:34] Waiting for PostgreSQL to become available attempt 2/5 ...
[BACKEND][2022-12-01 18:56:34] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:34] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:34] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused
[BACKEND][2022-12-01 18:56:34] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:34] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address
[BACKEND][2022-12-01 18:56:34] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:34]
2022-12-01 18:56:35,721 INFO spawned: 'postgresql' with pid 461
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.762 UTC [461] LOG: listening on IPv4 address "127.0.0.1", port 5432
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.762 UTC [461] LOG: could not bind IPv6 address "::1": Cannot assign requested address
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.762 UTC [461] HINT: Is another postmaster already running on port 5432? If not, wait a few seconds and retry.
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.770 UTC [461] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.791 UTC [472] LOG: database system was shut down at 2022-11-30 14:50:50 UTC
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.791 UTC [472] LOG: invalid resource manager ID 105 at 0/3180AC0
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.791 UTC [472] LOG: invalid primary checkpoint record
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.791 UTC [472] PANIC: could not locate a valid checkpoint record
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.797 UTC [461] LOG: startup process (PID 472) was terminated by signal 6: Aborted
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.797 UTC [461] LOG: aborting startup due to startup process failure
[POSTGRES][2022-12-01 18:56:35] 2022-12-01 18:56:35.798 UTC [461] LOG: database system is shut down
2022-12-01 18:56:35,800 INFO exited: postgresql (exit status 1; not expected)
2022-12-01 18:56:35,800 INFO reaped unknown pid 470 (exit status 0)
2022-12-01 18:56:35,800 INFO gave up: postgresql entered FATAL state, too many start retries too quickly
[BACKEND][2022-12-01 18:56:36] Waiting for PostgreSQL to become available attempt 3/5 ...
[BACKEND][2022-12-01 18:56:36] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:36] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:36] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused
[BACKEND][2022-12-01 18:56:36] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:36] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address
[BACKEND][2022-12-01 18:56:36] Is the server running on that host and accepting TCP/IP connections?
Baserow was stopped or one of it's services crashed, see the logs above for more details.
[BACKEND][2022-12-01 18:56:36]
2022-12-01 18:56:36,768 WARN received SIGTERM indicating exit request
2022-12-01 18:56:36,768 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die
[BACKEND][2022-12-01 18:56:38] Waiting for PostgreSQL to become available attempt 4/5 ...
[BACKEND][2022-12-01 18:56:38] Error: Failed to connect to the postgresql database at localhost
[BACKEND][2022-12-01 18:56:38] Please see the error below for more details:
[BACKEND][2022-12-01 18:56:38] connection to server at "localhost" (127.0.0.1), port 5432 failed: Connection refused
[BACKEND][2022-12-01 18:56:38] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:38] connection to server at "localhost" (::1), port 5432 failed: Cannot assign requested address
[BACKEND][2022-12-01 18:56:38] Is the server running on that host and accepting TCP/IP connections?
[BACKEND][2022-12-01 18:56:38]
2022-12-01 18:56:39,818 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die
[BACKEND][2022-12-01 18:56:40] Waiting for PostgreSQL to become available attempt 5/5 ...
[BACKEND][2022-12-01 18:56:40] PostgreSQL did not become available in time...
2022-12-01 18:56:40,818 INFO exited: backend (exit status 1; not expected)
2022-12-01 18:56:40,818 INFO reaped unknown pid 250 (exit status 0)
2022-12-01 18:56:42,821 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die
[BEAT_WORKER][2022-12-01 18:56:44] Sleeping for 15 before starting beat to prevent startup errors.
[BEAT_WORKER][2022-12-01 18:56:44] Loaded backend plugins: my_baserow_plugin
[BEAT_WORKER][2022-12-01 18:56:44] WARNING: Baserow is configured to use a BASEROW_PUBLIC_URL of http://localhost. If you attempt to access Baserow on any other hostname requests to the backend will fail as they will be from an unknown host. Please set BASEROW_PUBLIC_URL if you will be accessing Baserow from any other URL then http://localhost.
2022-12-01 18:56:46,394 INFO waiting for processes, baserow-watcher, caddy, redis, backend, celeryworker, exportworker, webfrontend, beatworker to die
[BEAT_WORKER][2022-12-01 18:56:46] celery beat v5.2.3 (dawn-chorus) is starting.
[BEAT_WORKER][2022-12-01 18:56:46] __ - ... __ - _
[BEAT_WORKER][2022-12-01 18:56:46] LocalTime -> 2022-12-01 18:56:46
[BEAT_WORKER][2022-12-01 18:56:46] Configuration ->
[BEAT_WORKER][2022-12-01 18:56:46] . broker -> redis://:**@localhost:6379/0
[BEAT_WORKER][2022-12-01 18:56:46] . loader -> celery.loaders.app.AppLoader
[BEAT_WORKER][2022-12-01 18:56:46] . scheduler -> redbeat.schedulers.RedBeatScheduler
[BEAT_WORKER][2022-12-01 18:56:46] . redis -> redis://:**@localhost:6379/0
[BEAT_WORKER][2022-12-01 18:56:46] . lock -> `redbeat::lock` 1.33 minutes (80s)
[BEAT_WORKER][2022-12-01 18:56:46] . logfile -> [stderr]@%INFO
[BEAT_WORKER][2022-12-01 18:56:46] . maxinterval -> 20.00 seconds (20s)
2022-12-01 18:56:47,753 WARN killing 'beatworker' (237) with SIGKILL
[BEAT_WORKER][2022-12-01 18:56:47] [2022-12-01 18:56:46,751: INFO/MainProcess] beat: Starting...
2022-12-01 18:56:47,758 INFO stopped: beatworker (terminated by SIGKILL)
2022-12-01 18:56:47,758 INFO reaped unknown pid 304 (exit status 0)
[BASEROW-WATCHER][2022-12-01 18:56:48] Waiting for Baserow to become available, this might take 30+ seconds...
2022-12-01 18:56:48,470 INFO stopped: webfrontend (exit status 1)
2022-12-01 18:56:48,470 INFO reaped unknown pid 419 (terminated by SIGTERM)
[EXPORT_WORKER][2022-12-01 18:56:48] watchmedo auto-restart -d=/baserow/backend/src -d=/baserow/premium/backend/src -d=/baserow/enterprise/backend/src --pattern=*.py --recursive -- bash /baserow/backend/docker/docker-entrypoint.sh celery-exportworker
2022-12-01 18:56:48,470 INFO stopped: exportworker (terminated by SIGTERM)
2022-12-01 18:56:48,471 INFO reaped unknown pid 387 (exit status 0)
[CELERY_WORKER][2022-12-01 18:56:48] watchmedo auto-restart -d=/baserow/backend/src -d=/baserow/premium/backend/src -d=/baserow/enterprise/backend/src --pattern=*.py --recursive -- bash /baserow/backend/docker/docker-entrypoint.sh celery-worker
2022-12-01 18:56:48,471 INFO stopped: celeryworker (terminated by SIGTERM)
2022-12-01 18:56:48,471 INFO reaped unknown pid 277 (exit status 0)
2022-12-01 18:56:48,471 INFO reaped unknown pid 265 (exit status 0)
2022-12-01 18:56:48,472 INFO reaped unknown pid 369 (exit status 0)
[REDIS][2022-12-01 18:56:48] 191:M 01 Dec 2022 18:56:28.458 * Ready to accept connections
[REDIS][2022-12-01 18:56:48] 191:signal-handler (1669921008) Received SIGTERM scheduling shutdown...
[REDIS][2022-12-01 18:56:48] 191:M 01 Dec 2022 18:56:48.499 # User requested shutdown...
[REDIS][2022-12-01 18:56:48] 191:M 01 Dec 2022 18:56:48.499 # Redis is now ready to exit, bye bye...
2022-12-01 18:56:48,500 INFO stopped: redis (exit status 0)
2022-12-01 18:56:48,500 INFO reaped unknown pid 245 (exit status 0)
[CADDY][2022-12-01 18:56:48] {"level":"info","ts":1669920988.57429,"msg":"serving initial configuration"}
[CADDY][2022-12-01 18:56:48] {"level":"info","ts":1669921008.5004604,"msg":"shutting down apps, then terminating","signal":"SIGTERM"}
[CADDY][2022-12-01 18:56:48] {"level":"warn","ts":1669921008.500493,"msg":"exiting; byeee!! 👋","signal":"SIGTERM"}
[CADDY][2022-12-01 18:56:48] {"level":"info","ts":1669921008.5017242,"logger":"tls.cache.maintenance","msg":"stopped background certificate maintenance","cache":"0xc0000d89a0"}
[CADDY][2022-12-01 18:56:48] {"level":"info","ts":1669921008.5039456,"logger":"admin","msg":"stopped previous server","address":"tcp/localhost:2019"}
[CADDY][2022-12-01 18:56:48] {"level":"info","ts":1669921008.503974,"msg":"shutdown complete","signal":"SIGTERM","exit_code":0}
2022-12-01 18:56:48,505 INFO stopped: caddy (exit status 0)
2022-12-01 18:56:48,505 INFO reaped unknown pid 226 (exit status 0)
2022-12-01 18:56:49,506 INFO stopped: baserow-watcher (terminated by SIGTERM)
2022-12-01 18:56:49,506 INFO waiting for processes to die
2022-12-01 18:56:49,507 INFO stopped: processes (terminated by SIGTERM)
```
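Since the output is long, here is a minimal sketch of how the PostgreSQL failures could be filtered out of a log like the one above. The function name `postgres_failures` and the `sample` string are only illustrative and are not part of Baserow; the sample is a three-line excerpt of the log, and the regular expressions are an assumption about the line layout seen here.

```python
import re

# Severity tags that PostgreSQL uses for errors that stop the server.
SEVERITY = re.compile(r"\b(PANIC|FATAL):\s*(.*)")
# Some pastes carry a trailing "e(B" left over from a terminal escape sequence.
RESIDUE = re.compile(r"\s*e\(B\s*$")


def postgres_failures(log_text):
    """Return unique (severity, message) pairs from [POSTGRES] lines, in first-seen order."""
    seen = []
    for line in log_text.splitlines():
        # Only look at lines emitted by the embedded PostgreSQL process.
        if not line.startswith("[POSTGRES]"):
            continue
        match = SEVERITY.search(line)
        if match is None:
            continue
        message = RESIDUE.sub("", match.group(2)).strip()
        entry = (match.group(1), message)
        if entry not in seen:
            seen.append(entry)
    return seen


sample = """\
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.533 UTC [402] LOG: invalid primary checkpoint record
[POSTGRES][2022-12-01 18:56:28] 2022-12-01 18:56:28.533 UTC [402] PANIC: could not locate a valid checkpoint record e(B
[POSTGRES][2022-12-01 18:56:29] 2022-12-01 18:56:29.620 UTC [438] PANIC: could not locate a valid checkpoint record
"""

print(postgres_failures(sample))
```

Run against the log above, this kind of filter leaves a single entry, `PANIC: could not locate a valid checkpoint record`, which repeats on each of the four PostgreSQL start attempts.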

I am not sure why it is not working properly. Can you please shed some light on that?

cspocsai